Quick Start for Linux

In this quick start we will set up a Netidx resolver server and related tools on your local machine. This configuration is sufficient for doing development of netidx services and for trying out various publishers, subscribers, and tools without much setup.

First Install Rust and Netidx

Install rust via rustup if you haven't already. Ensure cargo is in your and then run,

cargo install netidx-tools

if you are on Max OS you must use,

cargo install --no-default-features netidx-tools

This will build and install the netidx command, which contains all

the built in command line tools necessary to run to the resolver

server, as well as the publisher/subscriber command line tools

You will need some build dependencies,

- libclang, necessary for bindgen, on debian/ubuntu

sudo apt install libclang-dev - gssapi, necessary for kerberos support, on debian/ubuntu

sudo apt install libkrb5-dev

Resolver Server Configuration

{

"parent": null,

"children": [],

"member_servers": [

{

"pid_file": "",

"addr": "127.0.0.1:4564",

"max_connections": 768,

"hello_timeout": 10,

"reader_ttl": 60,

"writer_ttl": 120,

"auth": {

"Local": "/tmp/netidx-auth"

}

}

],

"perms": {

"/": {

"wheel": "swlpd",

"adm": "swlpd",

"domain users": "sl"

}

}

}

Install the above config in

~/.config/netidx/resolver.json. This is the config for the

local resolver on your machine. Make sure port 4564 is free, or change

it to a free port of your choosing. If necessary you can change the

local auth socket to one of your choosing.

run netidx resolver-server -c ~/.config/netidx/resolver.json. This command will return

immediatly, and the resolver server will daemonize. Check that it's

running using ps auxwww | grep netidx.

NOTE, the resolver server does not currently support Windows.

Systemd

If desired you can start the resolver server automatically with systemd.

[Unit]

Description=Netidx Activation

[Service]

ExecStart=/home/eric/.cargo/bin/netidx resolver-server -c /home/eric/.config/resolver.json -f

[Install]

WantedBy=default.target

Modify this example systemd unit to match your configuration and then

install it in ~/.config/systemd/user/netidx.service. Then you can run

systemctl --user enable netidx

and

systemctl --user start netidx

Client Configuration

{

"addrs":

[

["127.0.0.1:4564", {"Local": "/tmp/netidx-auth"}]

],

"base": "/"

}

Install the above config in ~/.config/netidx/client.json. This is

the config all netidx clients (publishers and subscribers) will use to

connect to the resolver cluster.

- On Mac OS replace

~/.config/netidxwith~/Library/Application Support/netidx. - On Windows replace

~/.config/netidxwith~\AppData\Roaming\netidx(that's{FOLDERID_RoamingAppData}\netidx)

To test the configuration run,

netidx stress -a local publisher -b 127.0.0.1/0 --delay 1000 1000 10

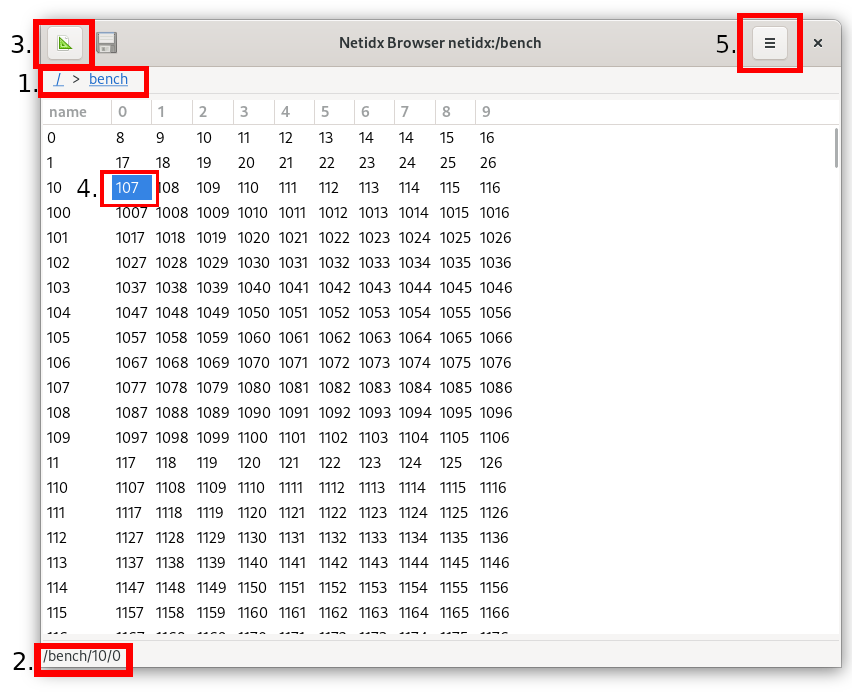

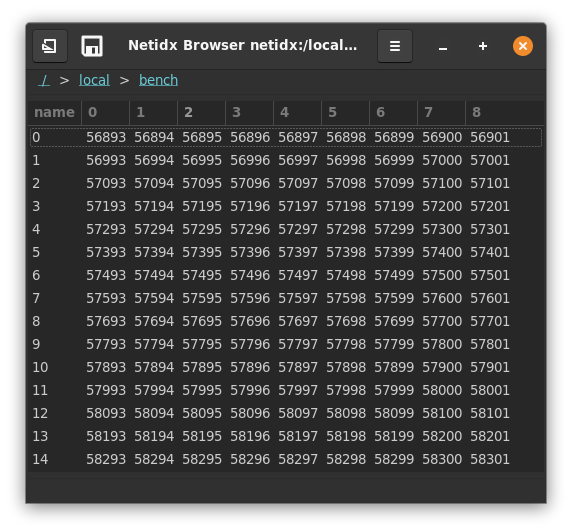

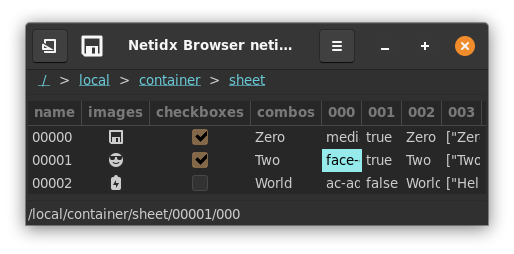

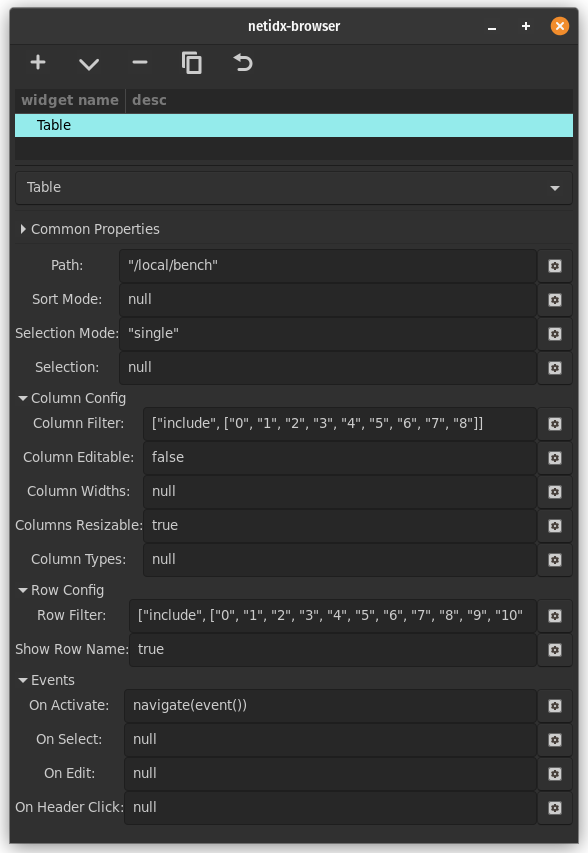

This will publish 10,000 items following the pattern /bench/$r/$c

where $r is a row number and $c is a column

number. e.g. /bench/100/8 corresponds to row 100 column 8. The

browser will draw this as a table with 1000 rows and 10 columns,

however for this test we will use the command line subscriber to look

at one cell in the table.

netidx subscriber -a local /bench/0/0

should print out one line like this every second

/bench/0/0|v64|1

The final number should increment, and if that works then netidx is

set up on your local machine. If it didn't work, try setting the

environment variable RUST_LOG=debug and running the stress publisher

and the subscriber again.

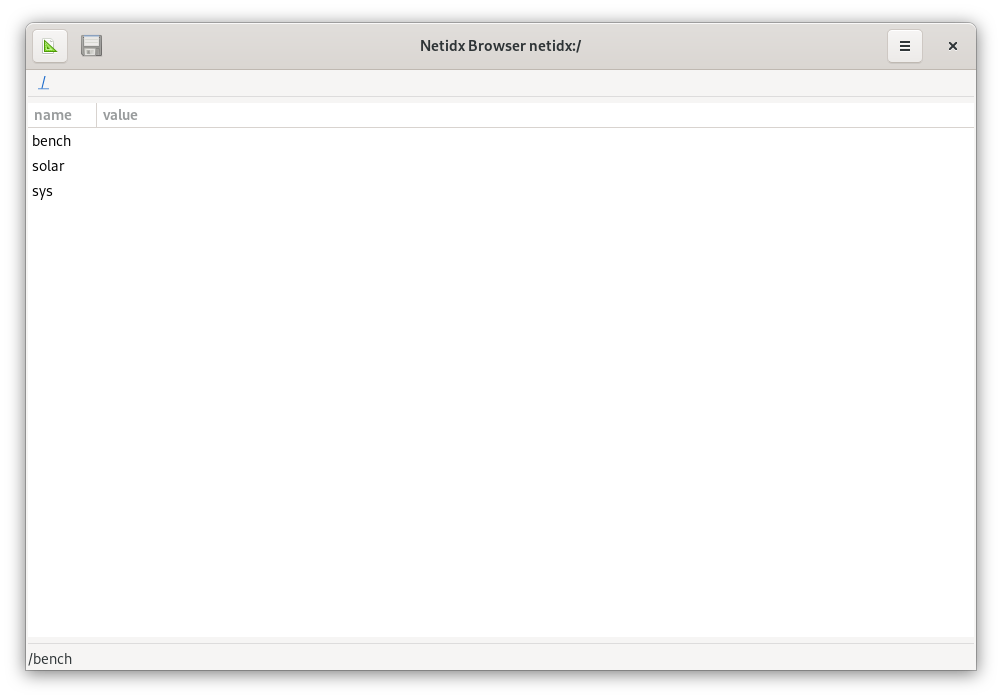

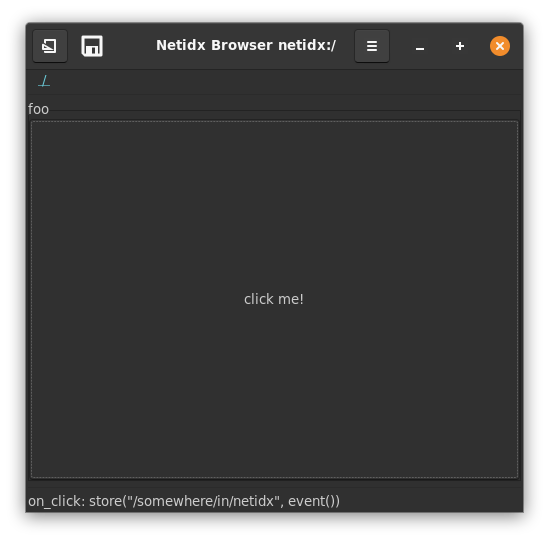

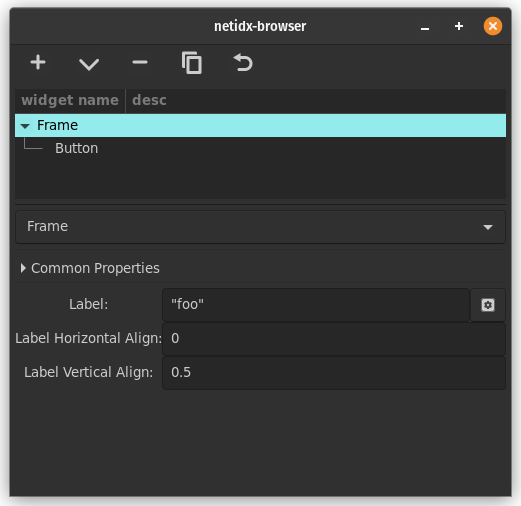

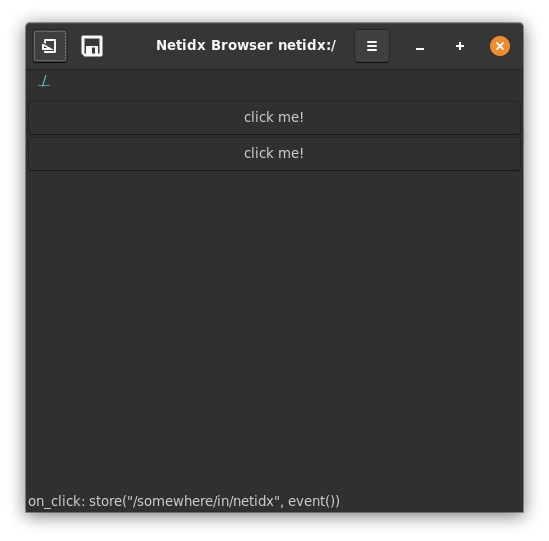

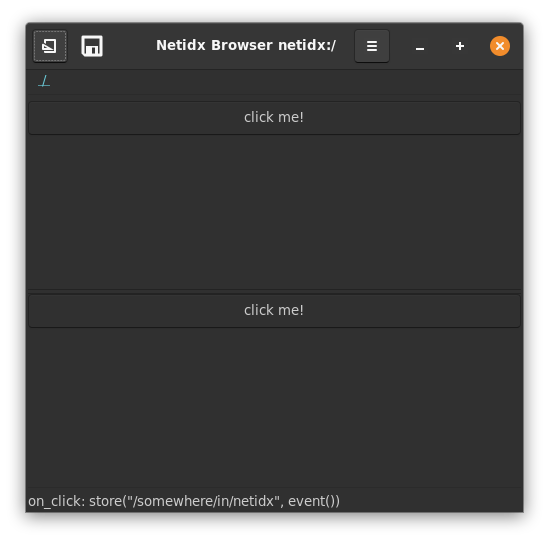

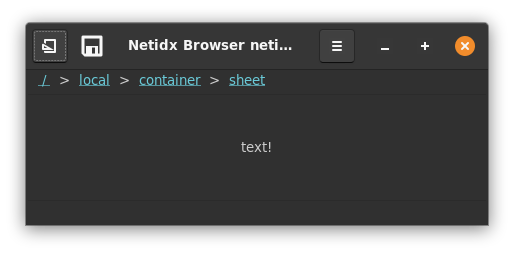

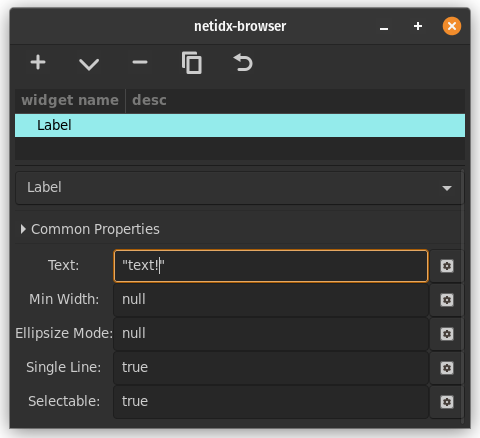

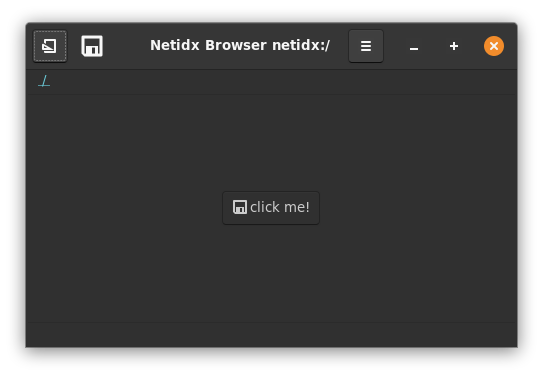

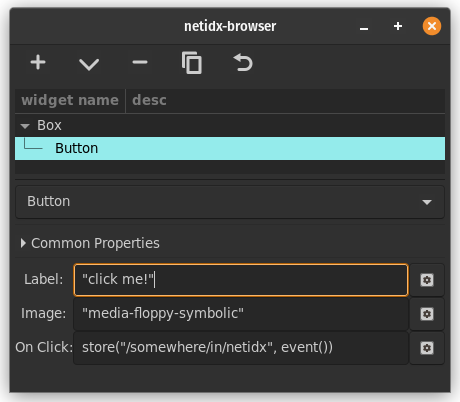

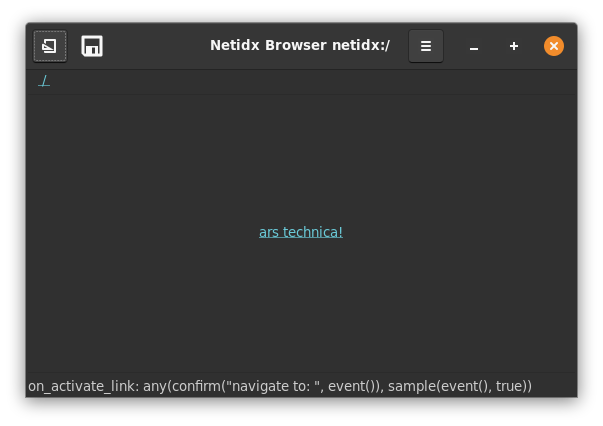

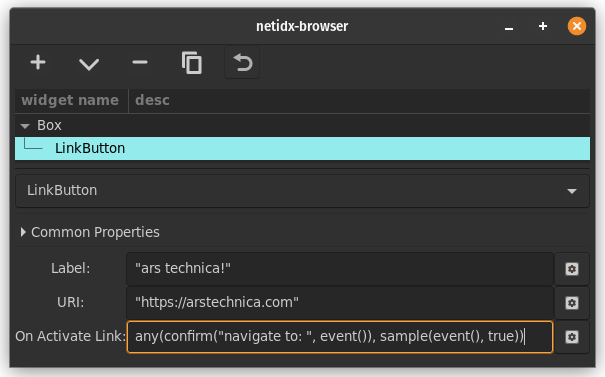

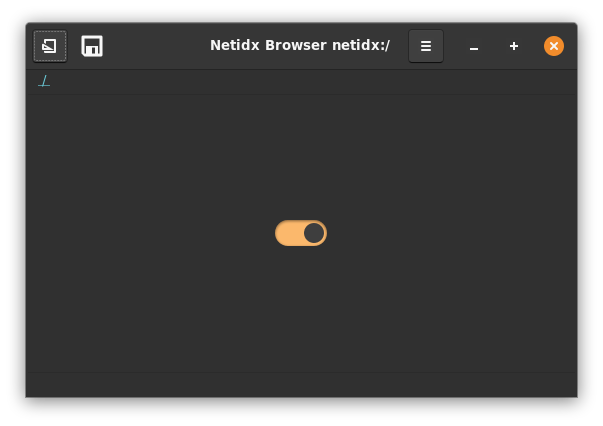

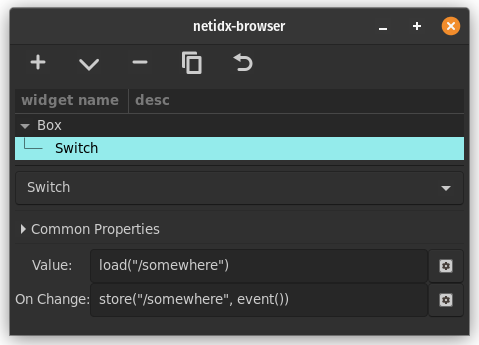

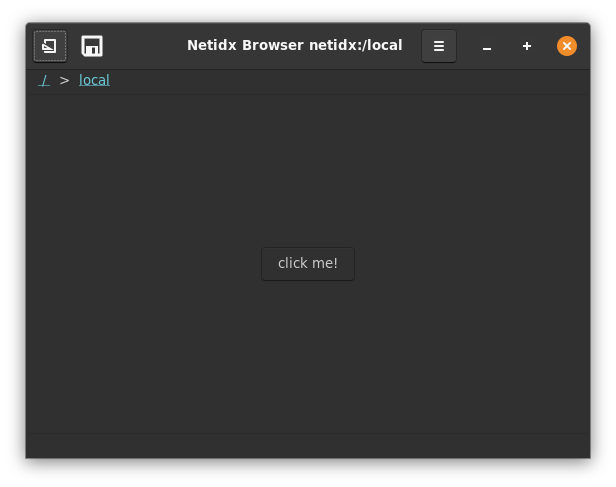

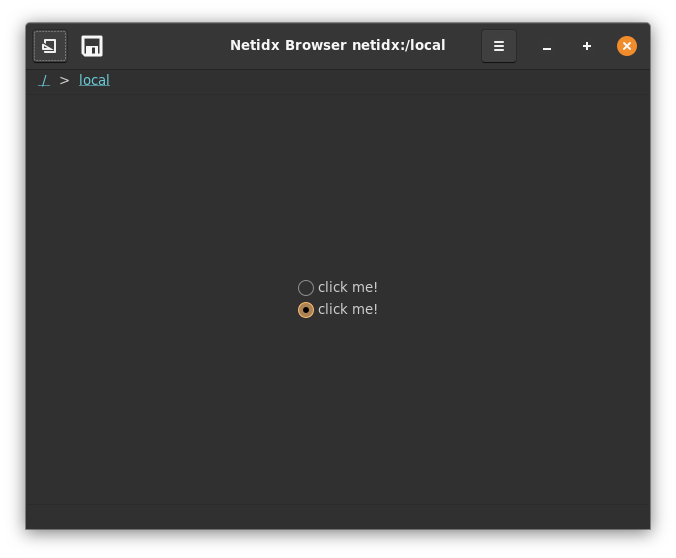

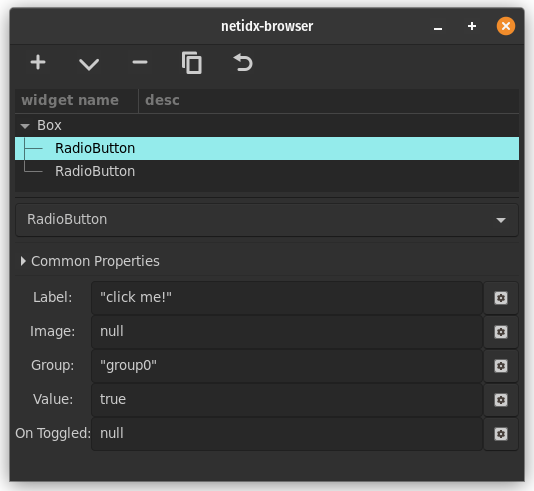

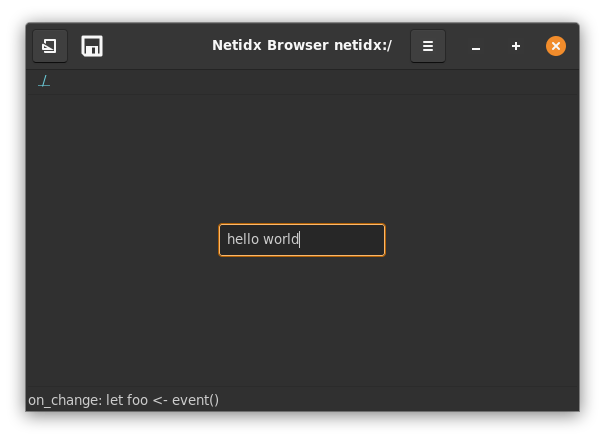

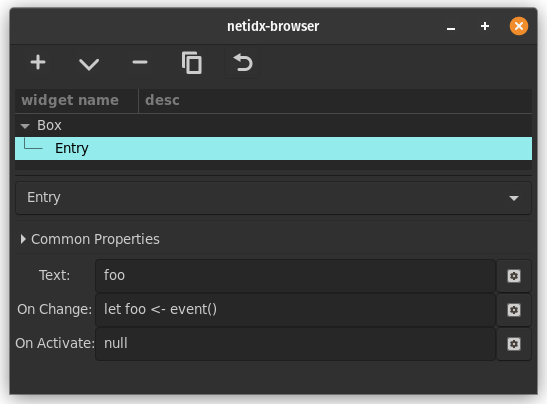

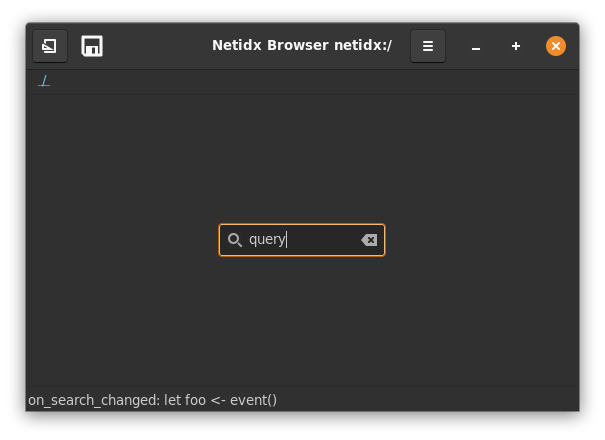

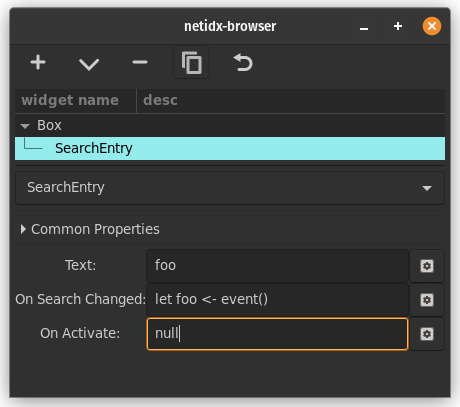

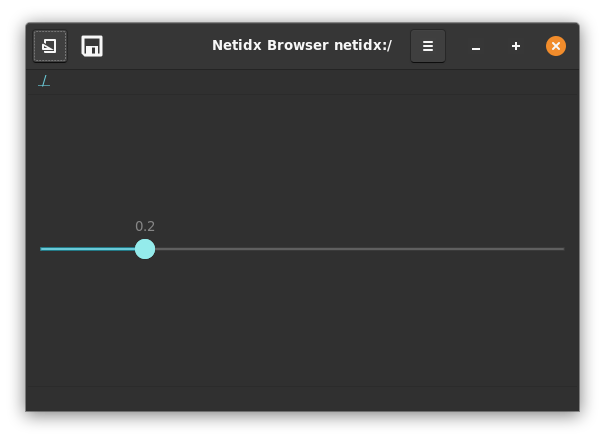

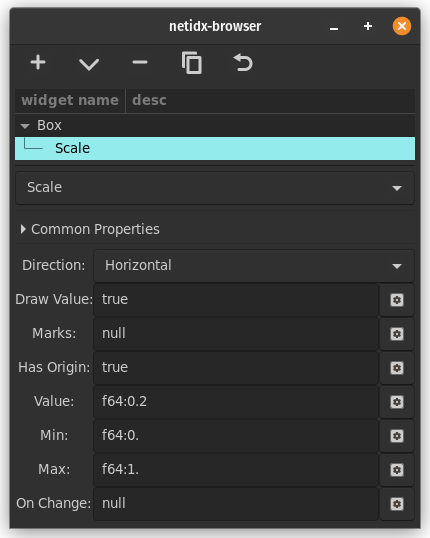

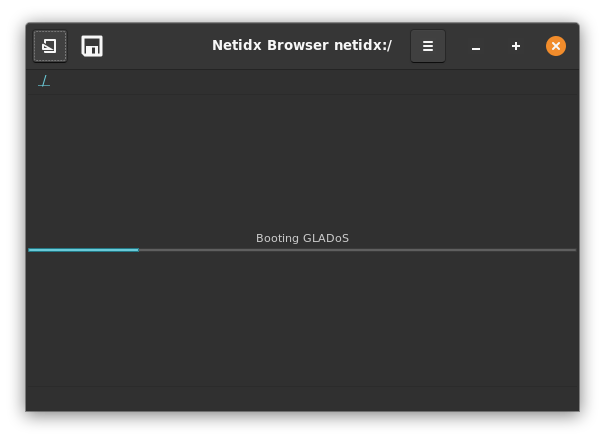

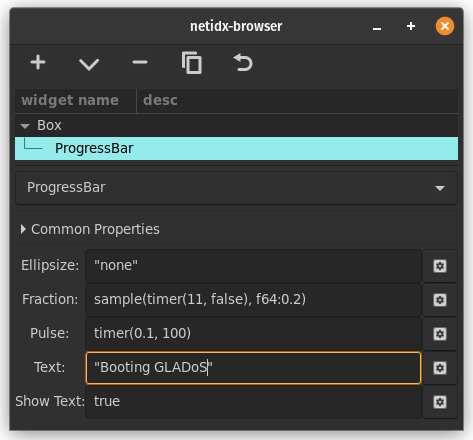

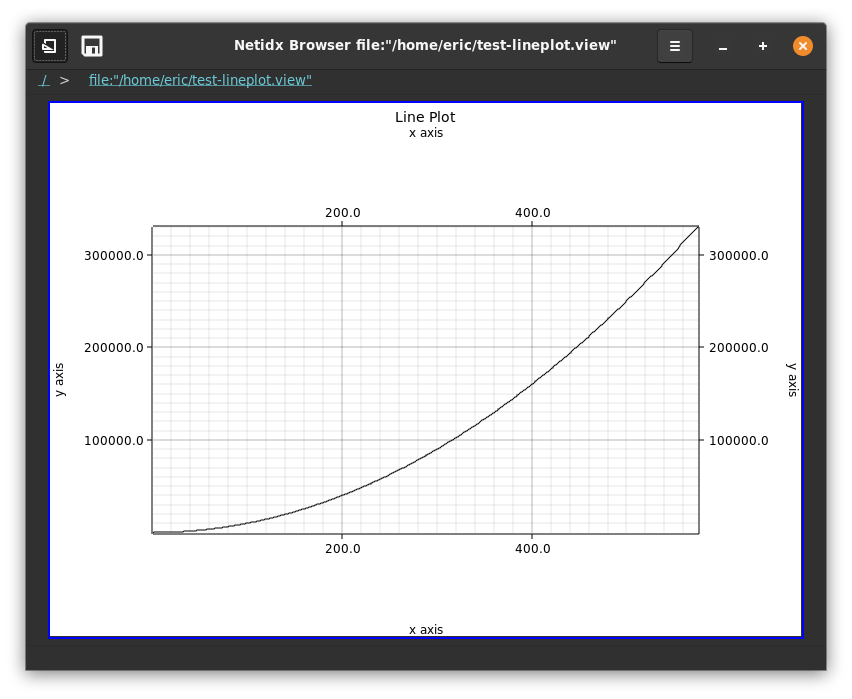

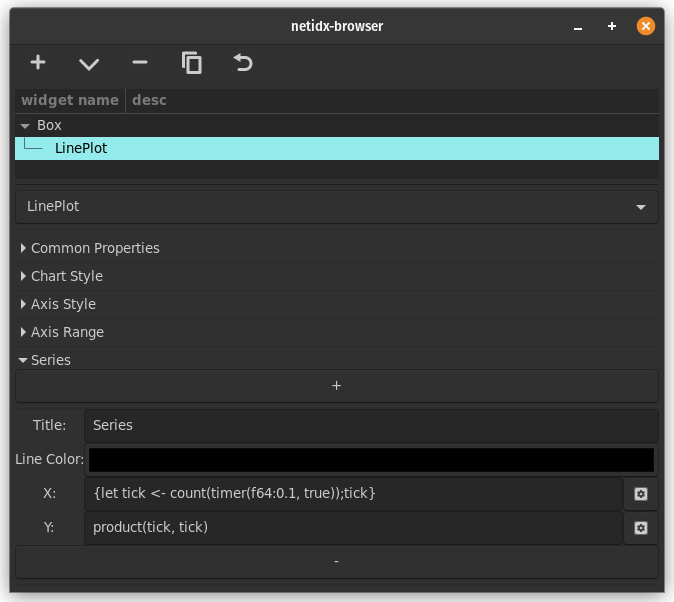

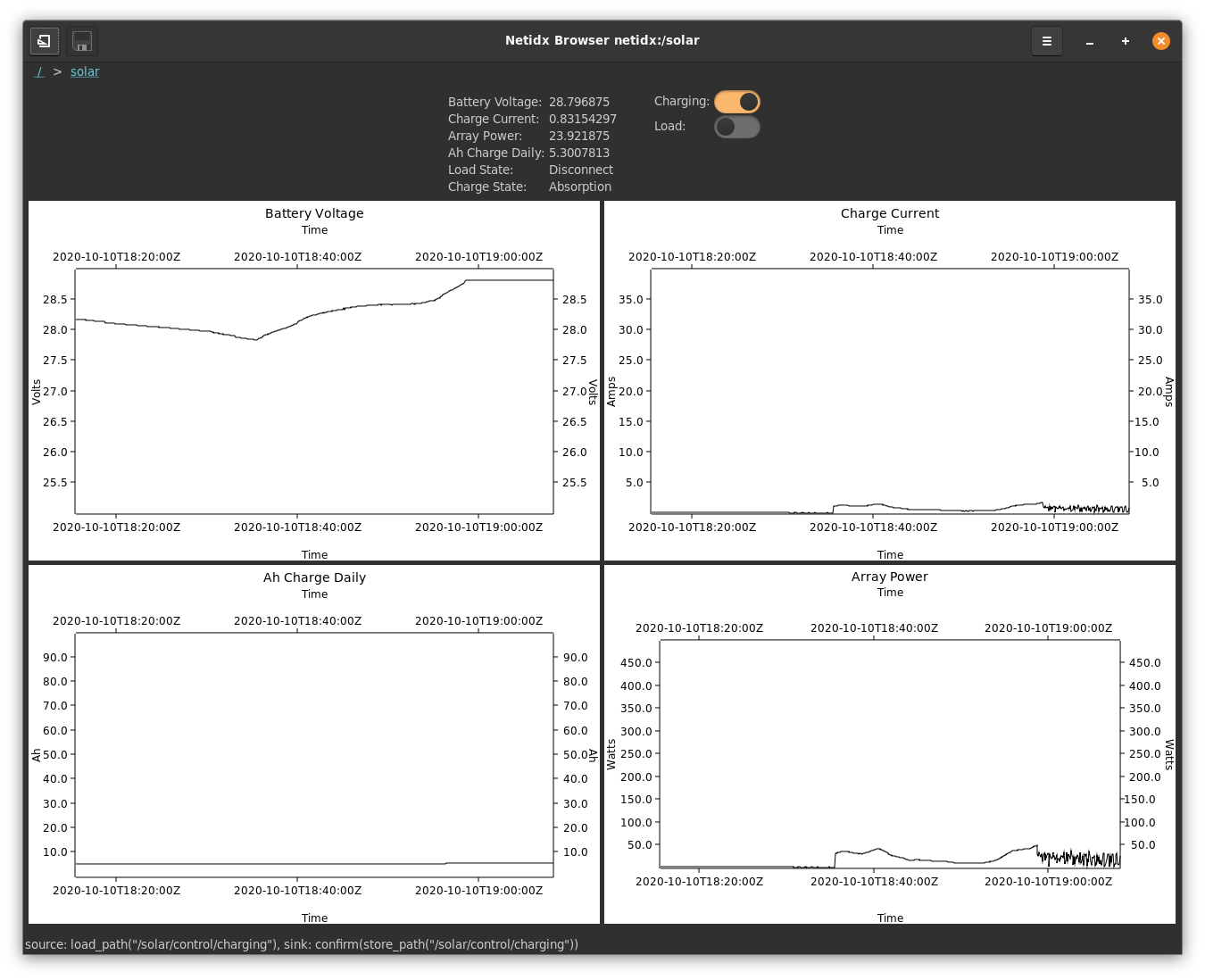

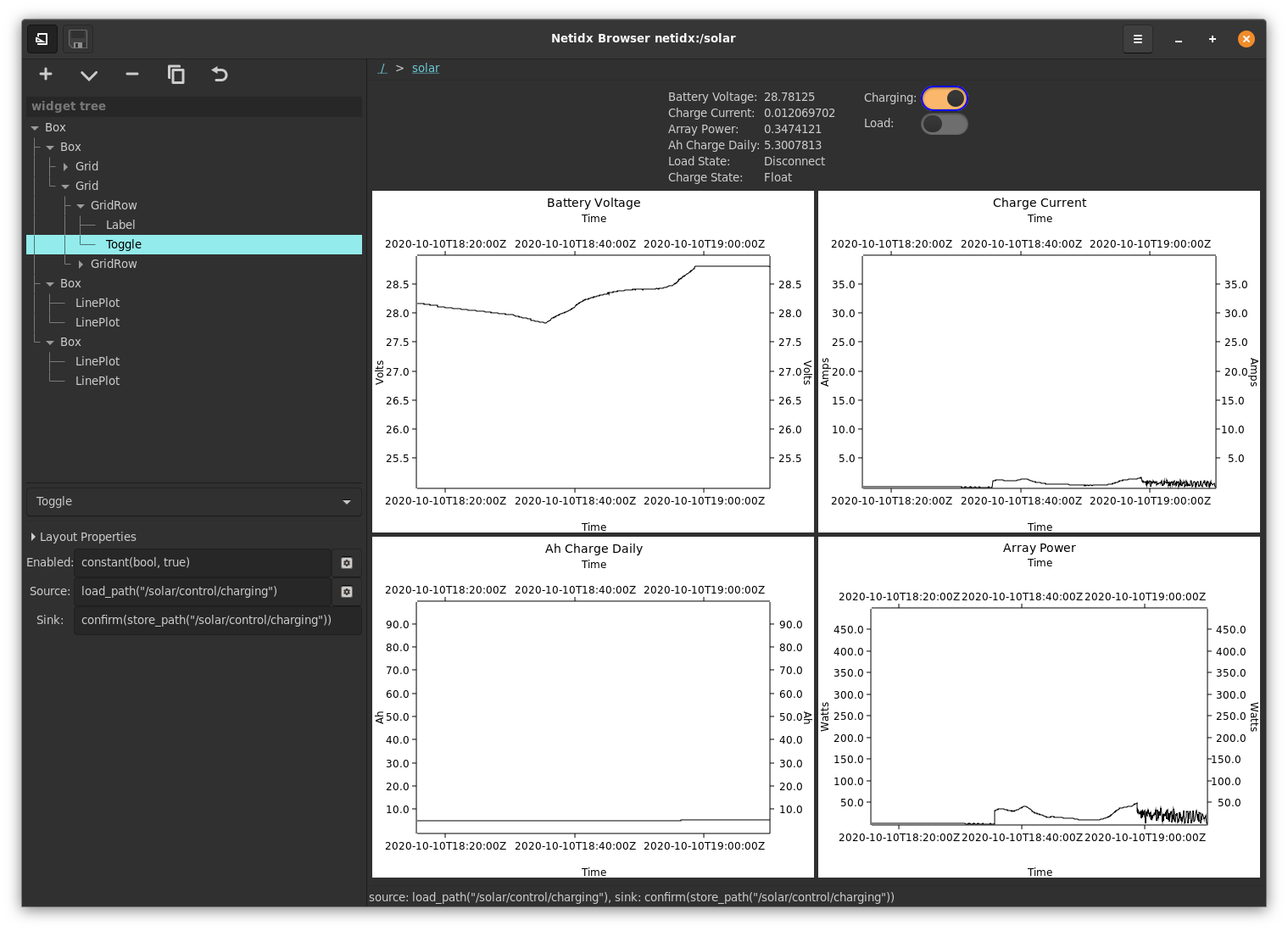

Optional Netidx Browser

The browser is an optional gui browser for the netidx tree, you need gtk development files installed to build it, on debian/ubuntu add those with

sudo apt install libgtk-3-dev

and then

cargo install netidx-browser

What is Netidx

Netidx is middleware that enables publishing a value, like 42, in one program and consuming it in another program, either on the same machine or across the network.

Values are given globally unique names in a hierarchical namespace. For example our published 42 might be named /the-ultimate-answer (normally we wouldn't put values directly under the root, but in this case it's appropriate). Any other program on the network can refer to 42 by that name, and will receive updates in the (unlikely) event that /the-ultimate-answer changes.

Comparison With Other Systems

-

Like LDAP

- Netidx keeps track of a hierarchical directory of values

- Netidx is browsable and queryable to some extent

- Netidx supports authentication, authorization, and encryption

- Netidx values can be written as well as read.

- Larger Netidx systems can be constructed by adding referrals between smaller systems. Resolver server clusters may have parents and children.

-

Unlike LDAP

- In Netidx the resolver server (like slapd) only keeps the location of the publisher that has the data, not the data iself.

- There are no 'entries', 'attributes', 'ldif records', etc. Every name in the system is either structural, or a single value. Entry like structure is created using hierarchy. As a result there is also no schema checking.

- One can subscribe to a value, and will then be notified immediatly if it changes.

- There are no global filters on data, e.g. you can't query for (&(cn=bob)(uid=foo)), because netidx isn't a database. Whether and what query mechanisms exist are up to the publishers. You can, however, query the structure, e.g. /foo/**/bar would return any path under foo that ends in bar.

-

Like MQTT

- Netidx values are publish/subscribe

- A single Netidx value may have multiple subscribers

- All Netidx subscribers receive an update when a value they are subscribed to changes.

- Netidx Message delivery is reliable and ordered.

-

Unlike MQTT

- In Netidx there is no centralized message broker. Messages flow directly over TCP from the publishers to the subscribers. The resolver server only stores the address of the publisher/s publishing a value.

The Namespace

Netidx values are published to a hierarchical tuple space. The structure of the names look just like a filename, e.g.

/apps/solar/stats/battery_sense_voltage

Is an example name. Unlike a file name, a netidx name may point to a value, and also have children. So keeping the file analogy, it can be both a file and a directory. For example we might have,

/apps/solar/stats/battery_sense_voltage/millivolts

Where the .../battery_sense_voltage is the number in volts, and it's

'millivolts' child gives the same number in millivolts.

Sometimes a name like battery_sense_voltage is published deep in the

hierarchy and it's parents are just structure. Unlike the file system

the resolver server will create and delete those structural containers

automatically, there is no need to manually manage them.

When a client wants to subscribe to a published value, it queries the resolver server cluster, and is given the addresses of all the publishers that publish the value. Multiple publishers can publish the same value, and the client will try all of them in a random order until it finds one that works. All the actual data flows from publishers to subscribers directly without ever going through any kind of centralized infrastructure.

The Data Format

In Netidx the data that is published is called a value. Values are mostly primitive types, consisting of numbers, strings, durations, timestamps, packed byte arrays, and arrays of values. Arrays of values can be nested.

Byte arrays and strings are zero copy decoded, so they can be a building block for sending other encoded data efficiently.

Published values have some other properties as well,

- Every non structural name points to a value

- Every new subscription immediately delivers it's most recent value

- When a value is updated, every subscriber receives the new value

- Updates arrive reliably and in the order the publisher made them (like a TCP stream)

Security

Netidx currently supports three authentication mechanisms, Kerberos v5, Local, and Tls. Local applies only on the same machine (and isn't supported on Windows), while many organizations already have Kerberos v5 deployed in the form of Microsoft Active Directory, Samba ADS, Redhat Directory Server, or one of the many other compatible solutions. Tls requires each participant in netidx (resolver server, subscriber, publisher) to have a certificate issued by a certificate authority that the others it wants to interact with trust.

Security is optional in netidx, it's possible to deploy a netidx system with no security at all, or it's possible to deploy a mixed system where only some publishers require security, with some restrictions.

- If a subscriber is configured with security, then it won't talk to publishers that aren't.

- If a publisher is configured with security, then it won't talk to a subscriber that isn't.

When security is enabled, regardless of which of the three mechanisms you get the following guarantees,

-

Mutual Authentication, the publisher knows the subscriber is who they claim to be, and the subscriber knows the publisher is who they claim to be. This applies for the resolver <-> subscriber, and resolver <-> publisher as well.

-

Confidentiality and Tamper detection, all messages are encrypted if they will leave the local machine, and data cannot be altered undetected by a man in the middle.

-

Authorization, The user subscribing to a given data value is authorized to do so. The resolver servers maintain a permissions database specifying who is allowed to do what where in the tree. Thus the system administrator can centrally control who is allowed to publish and subscribe where.

Cross Platform

While netidx is primarily developed on Linux, it has been tested on Windows, and Mac OS.

Scale

Netidx has been designed to support single namespaces that are pretty large. This is done by allowing delegation of subtrees to different resolver clusters, which can be done to an existing system without disturbing running publishers or subscribers. Resolver clusters themselves can also have a number of replicas, with read load split between them, further augmenting scaling.

At the publisher level, multiple publishers may publish the same name. When a client subscribes it will randomly pick one of them. This property can be used to balance load on an application, so long as the publishers syncronize with each other.

Publishing vmstat

In this example we build a shell script to publish the output of the venerable vmstat tool to netidx.

#! /bin/bash

BASE="/sys/vmstat/$HOSTNAME"

vmstat -n 1 | \

while read running \

blocked \

swapped \

free \

buf \

cache \

swap_in \

swap_out \

blocks_in \

blocks_out \

interrupts \

context_switches \

user \

system \

idle \

waitio \

stolen

do

echo "${BASE}/running|z32|${running}"

echo "${BASE}/blocked|z32|${blocked}"

echo "${BASE}/swapped|z32|${swapped}"

echo "${BASE}/free|u64|${free}"

echo "${BASE}/buf|u64|${buf}"

echo "${BASE}/cache|u64|${cache}"

echo "${BASE}/swap_in|z32|${swap_in}"

echo "${BASE}/swap_out|z32|${swap_out}"

echo "${BASE}/blocks_in|z32|${blocks_in}"

echo "${BASE}/blocks_out|z32|${blocks_out}"

echo "${BASE}/interrupts|z32|${interrupts}"

echo "${BASE}/context_switches|z32|${context_switches}"

echo "${BASE}/user|z32|${user}"

echo "${BASE}/system|z32|${system}"

echo "${BASE}/idle|z32|${idle}"

echo "${BASE}/waitio|z32|${waitio}"

echo "${BASE}/stolen|z32|${stolen}"

done | \

netidx publisher --spn publish/${HOSTNAME}@RYU-OH.ORG --bind 192.168.0.0/24

Lets dissect this pipeline of three commands into it's parts. First

vmstat itself, if you aren't familiar with it, is part of the procps

package on debian, which is a set of fundamental unix tools like

pkill, free, and w which would have been familiar to sysadmins

in the 80s.

vmstat -n 1

prints output like this

eric@ken-ohki:~$ vmstat -n 1

procs -----------memory---------- ---swap-- -----io---- -system-- ------cpu-----

r b swpd free buff cache si so bi bo in cs us sy id wa st

2 0 279988 4607136 1194752 19779448 0 0 5 48 14 8 15 6 79 0 0

0 0 279988 4605104 1194752 19780728 0 0 0 0 1800 3869 4 1 95 0 0

0 0 279988 4605372 1194752 19780632 0 0 0 0 1797 4175 3 1 96 0 0

0 0 279988 4604104 1194752 19781672 0 0 0 0 1982 4570 4 1 95 0 0

0 0 279988 4604112 1194752 19780648 0 0 0 0 1941 4690 3 2 95 0 0

-n means only print the header once, and 1 means print a line

every second until killed. Next we pass these lines to a shell while

loop that reads the line using the builtin read command into a shell

variable for each field. The field names were changed to be more

descriptive. In the body of the while loop we echo a path|typ|value

triple for each field. e.g. if we don't run the final pipe to netidx publisher the output of the while loop looks something like this.

/sys/vmstat/ken-ohki.ryu-oh.org/running|z32|1

/sys/vmstat/ken-ohki.ryu-oh.org/blocked|z32|0

/sys/vmstat/ken-ohki.ryu-oh.org/swapped|z32|279988

/sys/vmstat/ken-ohki.ryu-oh.org/free|u64|4644952

/sys/vmstat/ken-ohki.ryu-oh.org/buf|u64|1194896

/sys/vmstat/ken-ohki.ryu-oh.org/cache|u64|19775864

/sys/vmstat/ken-ohki.ryu-oh.org/swap_in|z32|0

/sys/vmstat/ken-ohki.ryu-oh.org/swap_out|z32|0

/sys/vmstat/ken-ohki.ryu-oh.org/blocks_in|z32|5

/sys/vmstat/ken-ohki.ryu-oh.org/blocks_out|z32|48

/sys/vmstat/ken-ohki.ryu-oh.org/interrupts|z32|14

/sys/vmstat/ken-ohki.ryu-oh.org/context_switches|z32|9

/sys/vmstat/ken-ohki.ryu-oh.org/user|z32|15

/sys/vmstat/ken-ohki.ryu-oh.org/system|z32|6

/sys/vmstat/ken-ohki.ryu-oh.org/idle|z32|79

/sys/vmstat/ken-ohki.ryu-oh.org/waitio|z32|0

/sys/vmstat/ken-ohki.ryu-oh.org/stolen|z32|0

No surprise that this is the exact format netidx publisher requires

to publish a value, so the final command in the pipeline is just

netidx publisher consuming the output of the while loop.

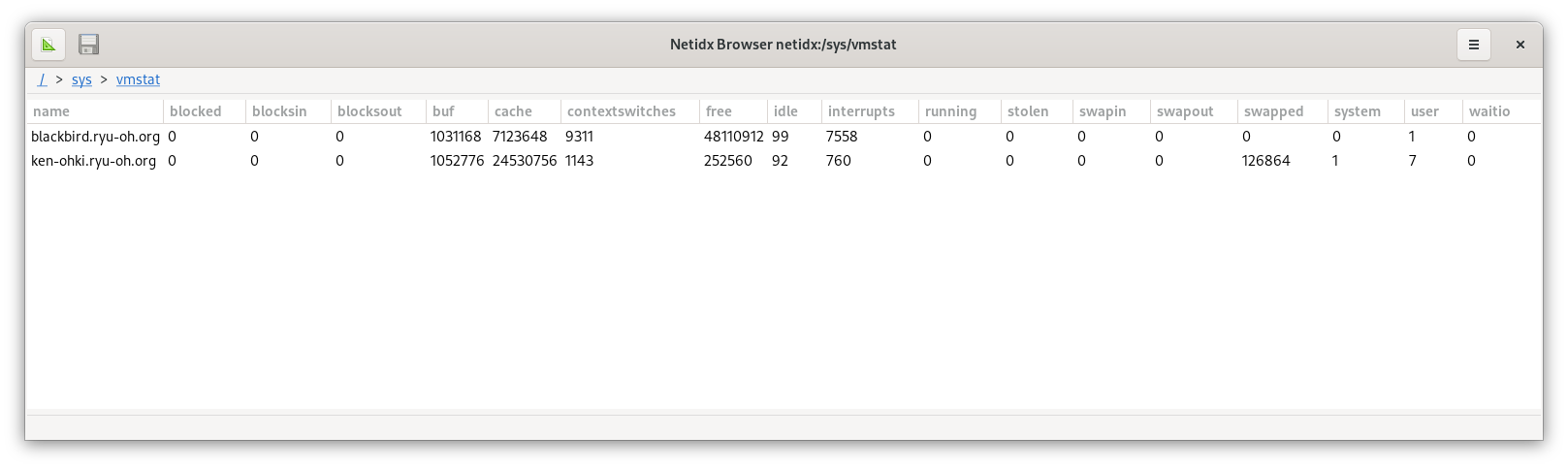

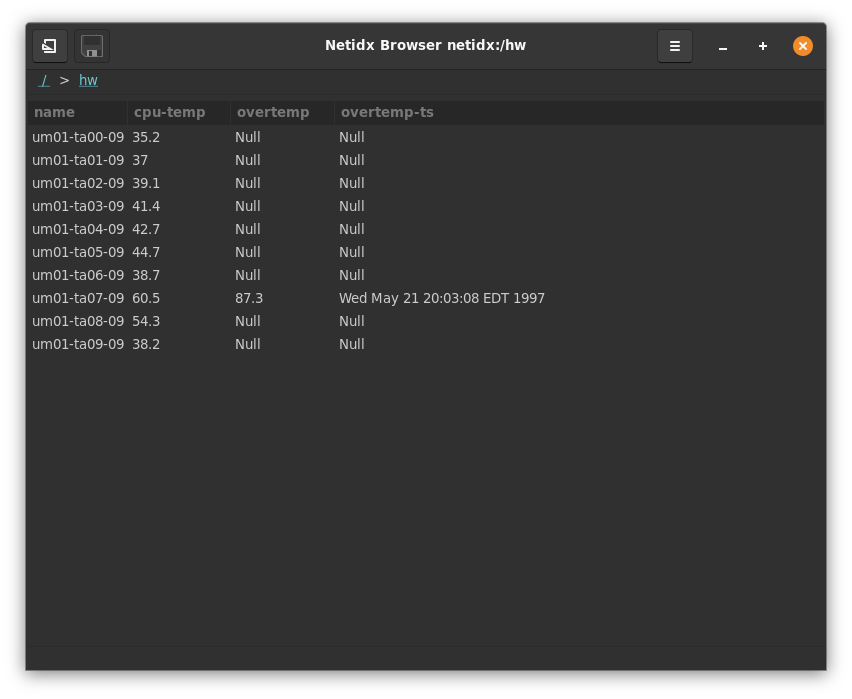

Running this on two of my systems results in a table viewable in the browser with two rows,

Because of the way the browser works, our regular tree structure is

automatically turned into a table with a row for each host, and a

column for each vmstat field. So we've made something that's

potentially useful to look at with very little effort. There are many

other things we can now do with this data, for example we could use

netidx record to record the history of vmstat on these machines, we

could subscribe, compute an aggregate, and republish it, or we could

sort by various columns in the browser. How about we have some fun and

pretend that all the machines running the script are part of an

integrated cluster, as if it was that easy, and so we want a total

vmstat for the cluster.

#! /bin/bash

BASE='/sys/vmstat'

declare -A TOTALS

declare -A HOSTS

netidx resolver list -w "${BASE}/**" | \

grep -v --line-buffered "${BASE}/total/" | \

sed -u -e 's/^/ADD|/' | \

netidx subscriber | \

while IFS='|' read -a input

do

IFS='/' path=(${input[0]})

host=${path[-2]}

field=${path[-1]}

if ! test -z "$host" -o -z "$field"; then

HOSTS[$host]="$host"

TOTALS["$host/$field"]=${input[2]}

T=0

for h in ${HOSTS[@]}

do

((T+=TOTALS["$h/$field"]))

done

echo "${BASE}/total/$field|${input[1]}|$T"

fi

done | netidx publisher --spn publish/${HOSTNAME}@RYU-OH.ORG --bind 192.168.0.0/24

Lets dissect this script,

netidx resolve list -w "${BASE}/**"

This lists everything under /sys/vmstat recursively, and instead of

exiting after doing that, it keeps polling every second, and if a new

thing shows up that matches the glob it lists the new thing. The

output is just a list of paths, e.g.

...

/sys/vmstat/total/blocked

/sys/vmstat/total/buf

/sys/vmstat/total/system

/sys/vmstat/blackbird.ryu-oh.org/swap_out

/sys/vmstat/ken-ohki.ryu-oh.org/buf

/sys/vmstat/ken-ohki.ryu-oh.org/idle

...

The next two commands in the pipeline serve to filter out the total

row we are going to publish (don't want to recursively total things

right), and transform the remaining lines into commands to netidx subscriber that will cause it to add a subscription. e.g.

...

ADD|/sys/vmstat/ken-ohki.ryu-oh.org/waitio

ADD|/sys/vmstat/blackbird.ryu-oh.org/blocked

ADD|/sys/vmstat/blackbird.ryu-oh.org/context_switches

ADD|/sys/vmstat/blackbird.ryu-oh.org/free

...

The above is what gets fed into the netidx subscriber command. So in

a nutshell we've said subscribe to all the things anywhere under

/sys/vmstat that are present now, or appear in the future, and

aren't part of the total row. Subscriber prints a line for each

subscription update in the form of a PATH|TYP|VAL triple, e.g.

..

/sys/vmstat/ken-ohki.ryu-oh.org/swap_out|z32|0

/sys/vmstat/ken-ohki.ryu-oh.org/blocks_in|z32|0

/sys/vmstat/ken-ohki.ryu-oh.org/blocks_out|z32|16

/sys/vmstat/ken-ohki.ryu-oh.org/interrupts|z32|1169

/sys/vmstat/ken-ohki.ryu-oh.org/context_switches|z32|3710

/sys/vmstat/ken-ohki.ryu-oh.org/user|z32|3

/sys/vmstat/ken-ohki.ryu-oh.org/system|z32|1

/sys/vmstat/ken-ohki.ryu-oh.org/idle|z32|96

...

That gets passed into our big shell while loop, which uses the read

builtin to read each line into an array called input. So in the body

of each iteration of the the while loop the variable input will be

an array with contents e.g.

[/sys/vmstat/ken-ohki.ryu-oh.org/swap_out, v32, 25]

Indexed starting at 0 as is the convention in bash. We split the path

into an array called path, the last two elements of which are

important to us. The last element is the field (e.g. swap_out), and

the second to last is the host. Each line is an update to one field of

one host, and when a field of a host is updated we want to compute the

sum of that field for all the hosts, and then print the new total for

that field. To do this we need to remember each field for each host,

since only one field of one host gets updated at a time. For this we

use an associative array with a key of $host/$field, thats

TOTALS. We also need to remember all the host names, so that when we

are ready to compute our total, we can look up the field for every

host, that's HOSTS. Finally we pass the output of this while loop to

the publisher, and now we have a published total row.

Administration

First Things First

If you plan to use Kerberos make sure you have it set up properly, including your KDC, DNS, DHCP, etc. If you need help with kerberos I suggest the O'REILLY book. If you want something free the RedHat documentation isn't too bad, though it is somewhat specific to their product.

Problems with Kerberos/GSSAPI can often be diagnosed by setting

KRB5_TRACE=/dev/stderr, and/or RUST_LOG=debug. GSSAPI errors can

sometimes be less than helpful, but usually the KRB5_TRACE is more

informative.

Resources and Gotchas

-

Expect to use about 500 MiB of ram in the resolver server for every 1 million published values.

-

Both read and write operations will make use of all available logical processors on the machine. So, in the case you are hitting performance problems, try allocating more cores before taking more drastic segmentation steps.

-

Even when the resolvers are very busy they should remain fair. Large batches of reads or writes are broken into smaller reasonably sized batches for each logical processor. These batches are then interleaved pseudo randomly to ensure that neither reads nor writes are starved.

-

Be mindful of the maximum number of available file descriptors per process on the resolver server machine when setting max_connections. You can easily raise this number on modern linux systems using ulimit.

-

While the resolver server drops idle read client connections fairly quickly (default 60 seconds), if you have many thousands or tens of thousands of read clients that want to do a lot of reading simultaneously then you may need to raise the maximum number of file descriptors available, and/or deploy additional processes to avoid file descriptor exhaustion.

-

Some implementations of Krb5/GSSAPI keep a file descriptor open for every active client/server session, which in our case means every read client, but also every publisher, connected or not. This has been fixed in recent versions of MIT Kerberos (but may still manifest if you are running with KRB5_TRACE). Keep this in mind if you're seeing file descriptor exhaustion.

Resolver Server Configuration

Each resolver server cluster shares a configuration file. At startup time each member server is told it's zero based index in the list of member servers. Since the default is 0 the argument can be omitted if there is only one server in the cluster.

Here is an example config file for a resolver cluster that lives in the middle of a three level hierarchy. Above it is the root server, it is responsible for the /app subtree, and it delegates /app/huge0 and /app/huge1 to child servers.

{

"parent": {

"path": "/app",

"ttl": 3600,

"addrs": [

[

"192.168.0.1:4654",

{

"Krb5": "root/server@YOUR-DOMAIN"

}

]

]

},

"children": [

{

"path": "/app/huge0",

"ttl": 3600,

"addrs": [

[

"192.168.0.2:4654",

{

"Krb5": "huge0/server@YOUR-DOMAIN"

}

]

]

},

{

"path": "/app/huge1",

"ttl": 3600,

"addrs": [

[

"192.168.0.3:4654",

{

"Krb5": "huge1/server@YOUR-DOMAIN"

}

]

]

}

],

"member_servers": [

{

"pid_file": "/var/run/netidx",

"addr": "192.168.0.4:4564",

"max_connections": 768,

"hello_timeout": 10,

"reader_ttl": 60,

"writer_ttl": 120,

"auth": {

"Krb5": "app/server@YOUR-DOMAIN"

}

}

],

"perms": {

"/app": {

"wheel": "swlpd",

"adm": "swlpd",

"domain users": "sl"

}

}

}

parent

This section is either null if the cluster has no parent, or a record specfying

-

path: The path where this cluster attaches to the parent. For example a query for something in /tmp would result in a referral to the parent in the above example, because /tmp is not a child of /app, so this cluster isn't authoratative for /tmp. It's entirely posible that the parent isn't authoratative for /tmp either, in which case the client would get another referral upon querying the parent. This chain of referrals can continue until a maximum number is reached (to prevent infinite cycles).

-

ttl: How long, in seconds, clients should cache this parent. If for example you reconfigured it to point to another IP, clients might still try to go to the old ip for as long as the ttl.

-

addrs: The addresses of the servers in the parent cluster. This is a list of pairs of ip:port and auth mechanism. The authentication mechanism of the parent may not be Local, it must be either Anonymous or Krb5. In the case of Krb5 you must include the server's spn.

children

This section contains a list of child clusters. The format of each child is exactly the same as the parent section. The path field is the location the child attaches in the tree, any query at or below that path will be referred to the child.

member_servers

This section is a list of all the servers in this cluster. The fields on each server are,

-

id_map_command: Optional. The path to the command the server should run in order to map a user name to a user and a set of groups that user is a member of. Default is

/usr/bin/id. If a custom command is specified then it's output MUST be in the same format as the/usr/bin/idcommand. This command will be passed the name of the user as a single argument. Depending on the auth mechanism this "name" could be e.g.eric@RYU-OH.ORGfor kerberos, justericfor local auth, oreric.users.architect.comfor tls auth (it will pass the common name of the users' certificate) -

pid_file: the path to the pid file you want the server to write. The server id folowed by .pid will be appended to whatever is in this field. So server 0 in the above example will write it's pid to /var/run/netidx0.pid

-

addr: The socket address and port that this member server will report to clients. This should be it's public ip, the ip clients use to connect to it from the outside.

-

bind_addr: The socket address that the server will actually bind to on the local machine. This defaults to 0.0.0.0. In the case where you are behind a NAT, or some other contraption, you should set this to the private ip address corresponding to the interface you actually want to receive traffic on.

-

max_connections: The maximum number of simultaneous client connections that this server will allow. Client connections in excess of this number will be accepted and immediatly closed (so they can hopefully try another server).

-

hello_timeout: The maximum time, in seconds, that the server will wait for a client to complete the initial handshake process. Connections that take longer than this to handshake will be closed.

-

reader_ttl: The maximum time, in seconds, that the server will retain an idle read connection. Idle read connections older than this will be closed.

-

writer_ttl: The maximum time, in seconds, that the server will retain an idle write connection. Idle connections older than this will be closed, and all associated published data will be cleared. Publishers autoatically set their heartbeat interval to half this value. This is the maximum amount of time data from a dead publisher will remain in the resolver.

-

auth: The authentication mechanism used by this server. One of Anonymous, Local, Krb5, or Tls. Local must include the path to the local auth socket file that will be used to verify the identity of clients. Krb5 must include the server's spn. Tls must include the domain name of the server, the path to the trusted certificates, the server's certificate (it's CN must match the domain name), and the path to the server's private key. For example,

"Tls": { "name": "resolver.architect.com", "trusted": "trusted.pem", "certificate": "cert.pem", "private_key": "private.key" }The certificate

CNmust beresolver.architect.com. The may not be encrypted.

perms

The server perissions map. This will be covered in detail in the authorization chapter. If a member server's auth mechanism is anonymous, then this is ignored.

Client Configuration

Netidx clients such as publishers and subscribers try to load their configuration files from the following places in order.

- $NETIDX_CFG

- config_dir:

- on Linux: ~/.config/netidx/client.json

- on Windows: ~\AppData\Roaming\netidx\client.json

- on MacOS: ~/Library/Application Support/netidx/client.json

- global_dir

- on Linux: /etc/netidx/client.json

- on Windows: C:\netidx\client.json

- on MacOS: /etc/netix/client.json

Since the dirs crate is used to discover these paths, they are locally configurable by OS specific means.

Example

{

"addrs":

[

["192.168.0.1:4654", {"Krb5": "root/server@YOUR-DOMAIN"}]

],

"base": "/"

}

addrs

A list of pairs or ip:port and auth mechanism for each server in the cluster. Local should include the path to the local authentication socket file. Krb5 should include the server's spn.

base

The base path of this server cluster in the tree. This should correspond to the server cluster's parent, or "/" if it's parent is null.

default_auth

Optional. Specify the default authentication mechanism. May be one of

Anonymous, Local, Krb5, or Tls

tls

This is required only if using tls. Because netidx is a

distributed system, when in tls mode a subscriber may need to interact

with different organizations that don't necessarially trust each other enough

to share a certificate authority. That is why subscribers may be configured

with multiple identities. When connecting to another netidx entity a

subscriber will pick the identity that most closely matches the domain

of that entity. For example, in the below config, when connecting to

resolver.footraders.com the client will use the footraders.com identity.

When connecting to core.architect.com it will choose the architect.com

identity. When connecting to a-feed.marketdata.architect.com it would

choose the marketdata.architect.com identity.

When publishing, the default identity is used unless another identity is specified to the publisher.

"tls": {

"default_identity": "footraders.com",

"identities": {

"footraders.com": {

"trusted": "/home/joe/.config/netidx/footradersca.pem",

"certificate": "/home/joe/.config/netidx/footraders.crt",

"private_key": "/home/joe/.config/netidx/footraders.key"

},

"architect.com": {

"trusted": "/home/joe/.config/netidx/architectca.pem",

"certificate": "/home/joe/.config/netidx/architect.crt",

"private_key": "/home/joe/.config/netidx/architect.key"

},

"marketdata.architect.com": {

"trusted": "/home/joe/.config/netidx/architectca.pem",

"certificate": "/home/joe/.config/netidx/architectmd.crt",

"private_key": "/home/joe/.config/netidx/architectmd.key"

}

}

}

Managing TLS

Tls authentication requires a bit more care than even Kerberos. Here we'll go over a quick configuration using the openssl command line tool. I'll be using,

$ openssl version

OpenSSL 3.0.2 15 Mar 2022 (Library: OpenSSL 3.0.2 15 Mar 2022)

Setting Up a Local Certificate Authority

Unless you already have a corporate certificate authority, or you actually want to buy certificates for your netidx resolvers, publishers, and users from a commercial CA then you need to set up a certificate authority. This sounds like a big deal, but it's actually not. A CA is really just a certificate and accompanying private key that serves as the root of trust. That means that it is self signed, and people in your organization choose to trust it. It will then sign certificates for your users, publishers, and resolver servers, and they will be configured to trust certificates that it has signed.

openssl genrsa -aes256 -out ca.key 4096

This will generate the private key we will use for the local ca. This is the most important thing to keep secret. Use a strong password on it, and ideally keep it somewhere safe.

openssl req -new -key ./ca.key -x509 -sha512 -out ca.crt -days 7300 \

-subj "/CN=mycompany.com/C=US/ST=Some State/L=Some City/O=Some organization" \

-addext "basicConstraints=critical, CA:TRUE" \

-addext "subjectKeyIdentifier=hash" \

-addext "authorityKeyIdentifier=keyid:always, issuer:always" \

-addext "keyUsage=critical, cRLSign, digitalSignature, keyCertSign" \

-addext "subjectAltName=DNS:mycompany.com"

This will generate a certificate for the certificate authority and sign it with the private key.

The -addext flags add x509v3 attributes. Once this is complete we can view the certificate with

openssl x509 -text -in ca.crt

Generating User, Resolver, and Publisher Certificates

Now we can create certificates for various parts of the netidx system. Lets make one for the resolver server.

# generate the resolver server key. It must not be encrypted.

openssl genrsa -out resolver.key 4096

# generate a certificate signing request that will be signed our CA

openssl req -new -key ./resolver.key -sha512 -out resolver.req \

-subj "/CN=resolver.mycompany.com/C=US/ST=Some State/L=Some City/O=Some organization"

# sign the certificate request with the CA key and add restrictions to it using x509v3 extentions

openssl x509 -req -in ./resolver.req -CA ca.crt -CAkey ca.key \

-CAcreateserial -out resolver.crt -days 730 -extfile <(cat <<EOF

basicConstraints=critical, CA:FALSE

subjectKeyIdentifier=hash

authorityKeyIdentifier=keyid:always, issuer:always

keyUsage=nonRepudiation,digitalSignature,keyEncipherment

subjectAltName=DNS:resolver.mycompany.com

EOF

)

# check it

openssl verify -trusted ca.crt resolver.crt

This has one extra step, the generation of the request to be signed. If we were using a commercial certificate authority we would send this request to them and they would return the signed certificate to us. In this case it's just an extra file we can delete once we've signed the request.

The resolver server private key must not be encrypted, this is because it probably doesn't have any way to ask for a password on startup, since it's likely running on a headless server somewhere. So it's extra important to keep this certificate safe.

Generating user and publisher certificates is exactly the same as the above, except that they are

permitted to have password protected private keys. However if you do this, make sure there is an

askpass command configured, and that your system level keychain service is running and unlocked.

Once the password has been entered once, it will be added to the keychain and should not need to

be entered again.

It's possible to use the same certificate for multiple services, however it's probably not a great idea unless it's for multiple components of the same system (e.g. lots of publishers in a cluster), or if a user is testing a new publisher it can probably just use their certificate.

Distributing Certificates

With the configuration above you only need to distribute the CA certificate. Every netidx component that will participate needs to have a copy of it, and it needs to be configured as trusted in the client config, and the resolver server config.

Other components only need to have their own certificate, as well as their private key.

Authorization

When using the Kerberos, Local, or Tls auth mechanisms we also need to specify permissions in the cluster config file, e.g.

...

"perms": {

"/": {

"eric@RYU-OH.ORG": "swlpd"

},

"/solar": {

"svc_solar@RYU-OH.ORG": "pd"

}

}

In order to do the corresponding action in netidx a user must have that permission bit set. Permission bits are computed starting from the root proceeding down the tree to the node being acted on. The bits are accumulated on the way down. Each bit is represented by a 1 character symbolic tag, e.g.

- !: Deny, changes the meaning of the following bits to deny the corresponding permission instead of grant it. May only be the first character of the permission string.

- s: Subscribe

- w: Write

- l: List

- p: Publish

- d: Publish default

For example if I was subscribing to

/solar/stats/battery_sense_voltage we would walk down the path from

left to right and hit this permission first,

"/": {

"eric@RYU-OH.ORG": "swlpd"

},

This applies to a Kerberos principal "eric@RYU-OH.ORG", the resolver

server will check the user principal name of the user making the

request, and it will check all the groups that user is a member of,

and if any of those are "eric@RYU-OH.ORG" then it will or the

current permission set with "swlpd". In this case this gives me

permission to do anything I want in the whole tree (unless it is later

denied). Next we would hit,

"/solar": {

"svc_solar@RYU-OH.ORG": "pd"

}

Which doesn't apply to me, and so would be ignored, and since there

are no more permissions entries my effective permissions at

/solar/stats/battery_sense_voltage are "swlpd", and so I would be

allowed to subscribe.

Suppose however I changed the above entry,

"/solar": {

"svc_solar@RYU-OH.ORG": "pd",

"eric@RYU-OH.ORG": "!swl",

}

Now, in our walk, when we arrived at /solar, we would find an entry

that matches me, and we would remove the permission bits s, w, and l,

leaving our effective permissions at

/solar/stats/battery_sense_voltage as "pd". Since that doesn't give

me the right to subscribe my request would be denied. We could also do

this by group.

"/solar": {

"svc_solar@RYU-OH.ORG": "pd",

"RYU-OH\domain admins": "!swl",

}

As you would expect, this deny permission will still apply to me because I am a member of the domain admins group. If I am a member of two groups, and both groups have different bits denied, then all of them would be removed. e.g.

"/solar": {

"svc_solar@RYU-OH.ORG": "pd",

"RYU-OH\domain admins": "!swl",

"RYU-OH\enterprise admins": "!pd",

}

Now my effective permissions under /solar are empty, I can do

nothing. If I am a member of more than one group, and one denies

permissions that the other grants the deny always takes precidence.

Each server cluster is completely independent for permissions. If for example this cluster had a child cluster, the administrators of that cluster would be responsible for deciding it's permissions map.

Anonymous

It's possible to give anonymous users permissions even on a Kerberos or Local auth mechanism system, and this could allow them to use whatever functions you deem non sensitive, subject to some limitations. There is no encryption. There is no tamper protection. There is no publisher -> subscriber authentication. Anonymous users can't subscribe to non anonymous publishers. Non anonymous users can't subscribe to anonymous publishers. You name anonymous "" in the permissions file, e.g.

"/tmp": {

"": "swlpd"

}

Now /tmp is an anonymous free for all. If you have Kerberos

deployed, it's probably not that useful to build such a hybrid system,

because any anonymous publishers would not be usable by kerberos

enabled users. However it might be useful if you have embedded systems

that can't use kerberos, and you don't want to build a separate

resolver server infrastructure for them.

Groups

You'll might have noticed I'm using AD style group names above, that's

because my example setup uses Samba in ADS mode so I can test windows

and unix clients on the same domain. The most important thing about

the fact that I'm using Samba ADS and thus have the group names I have

is that it doesn't matter. Groups are just strings to netidx, for a

given user, whatever the id command would spit out for that user is

what it's going to use for the set of groups the user is in (so that

better match what's in your permissions file). You need to set up the

resolver server machines such that they can properly resolve the set

of groups every user who might use netidx is in.

Luckily you only need to get this right on the machines that run resolver servers, because that's the only place group resolution happens in netidx. You're other client and server machines can be as screwed up and inconsistent as you want, as long as the resolver server machine agrees that I'm a member of "RYU-OH\domain admins" then whatever permissions assigned to that group in the permission file will apply to me.

All the non resolver server machines need to be able to do is get

Kerberos tickets. You don't even need to set them up to use Kerberos

for authentication (but I highly recommend it, unless you really hate

your users), you can just force people to type kinit foo@BAR.COM

every 8 hours if you like.

Running the Resolver Server

As of this writing the resolver server only runs on Unix, and has only

been extensively tested on Linux. There's no reason it couldn't run on

Windows, it's just a matter of some work around group name resolution

and service integration. Starting a resolver server is done from the

netidx command line tool (cargo install netidx-tools). e.g.

$ KRB5_KTNAME=FILE:/path/to/keytab \

netidx resolver-server -c resolver.json

By default the server will daemonize, include -f to prevent that. If

your cluster has multiple replica servers then you must pass --id <index> to specify which one you are starting, however since the

default is 0 you can omit the id argument in the case where you only

have 1 replica.

You can test that it's working by running,

$ netidx resolver list /

Which should print nothing (since you have nothing published), but should not error, and should run quickly. You can use the command line publisher and subscriber to further test. In my case I can do,

[eric@blackbird ~]$ netidx publisher \

--bind 192.168.0.0/24 \

--spn host/blackbird.ryu-oh.org@RYU-OH.ORG <<EOF

/test|string|hello world

EOF

and then I can subscribe using

[eric@blackbird ~]$ netidx subscriber /test

/test|string|hello world

you'll need to make sure you have permission, that you have a keytab you can read with that spn in it, and that the service principal exists etc. You may need to, for example, run the publisher and/or resolver server with

KRB5_KTNAME=FILE:/somewhere/keytabs/live/krb5.keytab

KRB5_TRACE=/dev/stderr can be useful in debugging kerberos issues.

Listener Check

The listener check is an extra security measure that is intended to prevent an authenticated user from denying service to another publisher by overwriting it's session. When a client connects to the resolver server for write, and kerberos, tls, or local auth is enabled, then after authentication the resolver server encrypts a challenge using the newly created session. It then connects to the write address proposed by this new publisher and presents the challenge, which the publisher must answer correctly, otherwise the old session will be kept, and the new client will be disconnected. So in order to publish at a given address you must,

- Be a valid user

- Actually be listening on the write address you propose to use for publishing. And the write address must be routable from the resolver server's position on the network.

- Have permission to publish where you want to publish.

Why is the listener check important?

Since connecting to the resolver as a publisher can be done by any user who can authenticate to the resolver, and since the address and port a publisher is going to insert into the resolver server as their address is just part of the hello message, without some kind of check anyone on your network could figure out the address of an important publisher, then connect to the resolver server and say they are that publisher address, even if they don't have permission to publish. There are several implications.

- publishers on different network segments that might share ip addresses can't use the same resolver server.

- the resolver must be able to route back to every publisher, and also it must be able to actually connect. For example your firewall must allow connections both ways.

Subscription Flow

Sometimes debugging problems requires a more detailed understanding of exactly what steps are involved in a subscription.

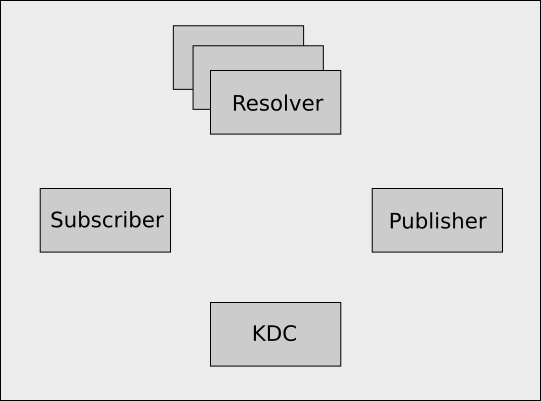

Components

In the full kerberos enabled version of netidx the following components are involved.

- The Kerberos 5 KDC (Key Distribution Center). e.g. The AD Domain Controller.

- Resolver Cluster, holds the path of everything published and the address of the publisher publishing it.

- Subscriber

- Publisher, holds the actual data, and has previously told the resolver server about the path of all the data it has.

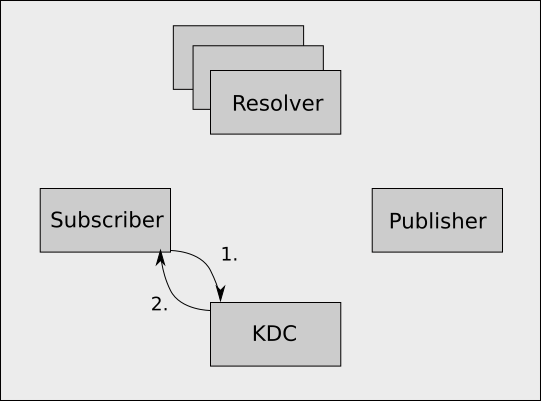

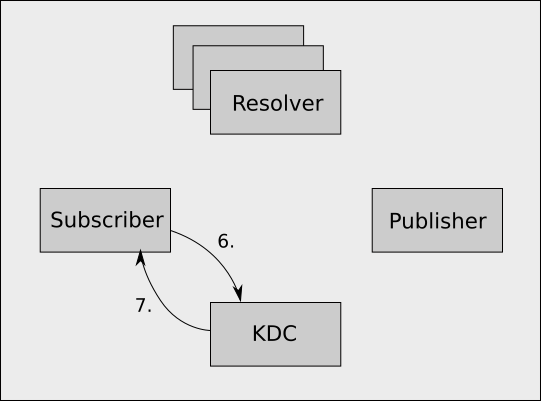

Step 1

- The Subscriber asks the KDC for a service ticket to talk to the Resolver Cluster. Note this only happens once for each user for some amount of time (usually hours), after which the service ticket is cached. The subscriber proves it's identity to the KDC using it's TGT.

- The KDC, having checked the validity of the subscriber's identity, generates a service ticket for the resolver server cluster. NOTE, Kerberos does not make authorization decisions, it merely allows entities to prove to each other that they are who they claim to be.

Step 2

- The Subscriber uses the service ticket to establish an encrypted GSSAPI session with the Resolver Cluster.

- Using the session it just established sends a resolve request for the paths it wants to subscribe to. All traffic is encrypted using the session.

- The Resolver Cluster verifies the presented GSSAPI token and

establishes a secure session, looks up the requested paths, and

returns a number of things to the subscriber for each path.

- The addresses of all the publishers who are publishing that path

- The service principal names of those publishers

- The permissions the subscriber has to the path

- The authorization token, which is a SHA512 hash of the concatenation of

- A secret shared by the Resolver Cluster and the Publisher

- The path

- The permissions

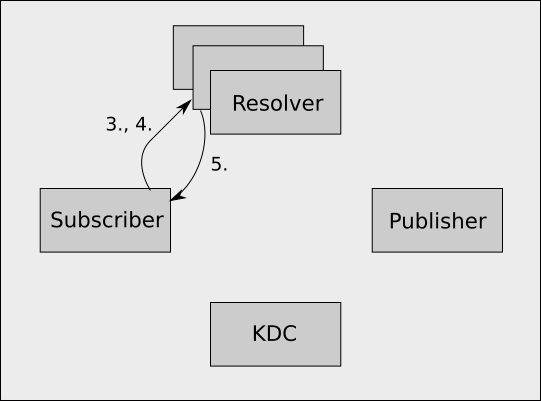

Step 3

- The subscriber picks a random publisher from the set of publishers publishing the path it wants, and requests a service ticket for that publisher's SPN from the KDC.

- The KDC validates the subscriber's TGT and returns a service ticket for the requested SPN, which will be cached going forward (usually for several hours).

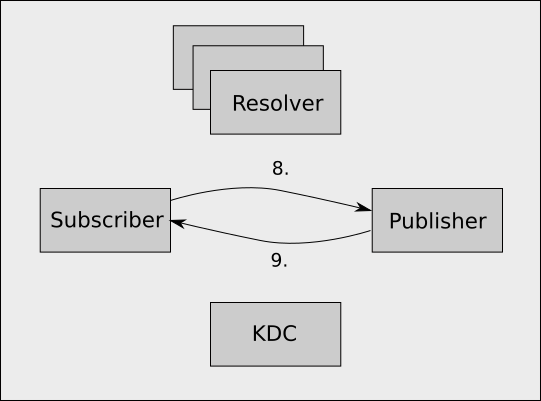

Step 4

-

The subscriber uses the service ticket it just obtained to establish an encrypted GSSAPI session with the publisher, and using this session it sends a subscribe request, which consists of,

- The path it wants to subscribe to

- The permissions the resolver cluster gave to it

- The authorization token

-

The publisher validates the subscriber's GSSAPI token and establishes an encrypted session, and then reads the subscribe request. It looks up the request path, and assuming it is publishing that path, it constructs a SHA512 hash value of,

- The secret it shared with the resolver cluster when it initially published the path.

- The path the subscriber is requesting

- The permissions the subscriber claims to have

It then checks that it's constructed auth token matches the one the subscriber presented. Since the subscriber does not know the secret the publisher shared with the resolver server it is computationally infeasible for the subscriber to generate a valid hash value for an arbitrary path or permissions, therefore checking this hash is an effective proof that the resolver cluster really gave the subscriber the permissions it is claiming to have.

Assuming all the authentication and authorization checks out, and the publisher actually publishes the requested value, it sends the current value back to the publisher along with the ID of the subscription.

Whenever the value changes the publisher sends the new value along with the ID of the subscription to the publisher (encrypted using the GSSAPI session, and over the same TCP session that was established earlier).

Other Authentication Methods

In the case of Tls and Local authentication, the subscription flow is similar, it just doesn't involve the KDC. Under local authentication, the resolver server is listening on a unix domain socket at an agreed upon path. The client connects to the socket, and the server is able to determine the local user on the other end. It then sends the client a token that it can use to make requests. From step 2 on local auth is more or less the same as kerberos.

Tls is very similar as well, except instead of a kdc, it's doing a TLS handshake. If the certificates check out, then from step 2 on, it's pretty much identical to kerberos.

In the case of anonymous authentication it's just a simple matter of look up the address from the resolver, and then subscribe to the publisher. All the data goes in the clear.

Fault Tolerance

As a system netidx depends on fault tolerant strategies in the subscriber, publisher, and resolver server in order to minimize downtime caused by a failure. Before I talk about the specific strategies used by each component I want to give a short taxonomy of faults as I think of them so we can be clear about what I'm actually talking about.

- Hang: Where a component of the system is not 'dead', e.g. the

process is still running, but is no longer responding, or is so slow

it may as well not be responding. IMHO this is the worst kind of

failure. It can happen at many different layers, e.g.

- You can simulate a hang by sending SIGSTOP to a unix process. It isn't dead, but it also won't do anything.

- A machine with a broken network card, such that most packets are rejected due to checksum errors, it's still on the network, but it's effective bandwidth is a tiny fraction of what it should be.

- A software bug causing a deadlock

- A malfunctioning IO device

- Crash: A process or the machine it's running on crashes cleanly and completely.

- Bug: A semantic bug in the system that causes an effective end to service.

- Misconfiguration: An error in the configuration of the system that

causes it not to work. e.g.

- Resolver server addresses that are routeable by some clients and not others

- Wrong Kerberos SPNs

- Misconfigured Kerberos

- Bad tls certificates

Subscriber & Publisher

-

Hang: Most hang situations are solved by heartbeats. Publisher sends a heartbeat to every subscriber that is connected to it every 5 seconds. Subscriber disconnects if it doesn't reveive at least 1 message every 100 seconds.

Once a hang is detected it is dealt with by disconnecting, and it essentially becomes a crash.

The hang case that heartbeats don't solve is when data is flowing, but not fast enough. This could have multiple causes e.g. the subscriber is too slow, the publisher is too slow, or the link between them is too slow. Whatever the cause, the publisher can handle this condition by providing a timeout to it's

flushfunction. This will cause any subscriber that can't consume the flushed batch within the specified timeout to be disconnected. -

Crash: Subscriber allows the library user to decide how to deal with a publisher crash. If the lower level

subscribefunction is used then on being disconnected unexpecetedly by the publisher all subscriptions are notified and marked as dead. The library user is free to retry. The library user could also usedurable_subscribewhich will dilligently keep trying to resubscribe, with linear backoff, until it is successful. Regardless of whether you retry manually or usedurable_subscribeeach retry will go through the entire process again, so it will eventually try all the publishers publishing a value, and it will pick up any new publishers that appear in the resolver server.

Resolver

- Hang: Resolver clients deal with a resolver server hang with a dynamically computed timeout based on the number of requests in the batch. The rule is, minimum timeout 15 seconds or 50 microseconds per simple operation in the batch for reads (longer for complex read ops like list matching) or 100 microseconds per simple operation in the batch for writes, whichever is longer. That timeout is a timeout to get an answer, not to finish the batch. Since the resolver server breaks large batches up into smaller ones, and answers each micro batch when it's done, the timeout should not usually be hit if the resolver is just very busy, since it will be sending back something periodically for each micro batch. The intent is for the timeout to trigger if the resolver is really hanging.

- Crash: Resolver clients deal with crashes differently depending on

whether they are read or write connections.

- Read Connections (Subscriber): Abandon the current connection, wait a random

time between 1 and 12 seconds, and then go through the whole

connection process again. That roughly entails taking the list of

all servers, permuting it, and then connecting to each server in

the list until one of them answers, says a proper hello, and

successfully authenticates (if authentication is on). For each batch a

resolver client will do this abandon and reconnect dance 3 times,

and then it will give up and return an error for that

batch. Subsuquent batches will start over from the beginning. In a

nutshell read clients will,

- try every server 3 times in a random order

- only give up on a batch if every server is down or unable to answer

- remain in a good state to try new batches even if previous batches have failed

- Write Connections (Publishers): Since write connections are

responsible for replicating their data out to each resolver server

they don't include some of the retry logic used in the read

client. They do try to replicate each batch 3 times seperated by a

1-12 second pause to each server in the cluster. If after 3 tries

they still can't write to one of the servers then it is marked as

degraded. The write client will try to replicate to a degraded

server again at each heartbeat interval. In a nutshell write

clients,

- try 3 times to write to each server

- try failed servers again each 1/2

writer_ttl - never fail a batch, just log an error and keep trying

- Read Connections (Subscriber): Abandon the current connection, wait a random

time between 1 and 12 seconds, and then go through the whole

connection process again. That roughly entails taking the list of

all servers, permuting it, and then connecting to each server in

the list until one of them answers, says a proper hello, and

successfully authenticates (if authentication is on). For each batch a

resolver client will do this abandon and reconnect dance 3 times,

and then it will give up and return an error for that

batch. Subsuquent batches will start over from the beginning. In a

nutshell read clients will,

One important consequence of the write client behavior is that in the

event all the resolver servers crash, when they come back up

publishers will republish everything after a maximum of 1/2

writer_ttl has elapsed.

Command Line Tools

You don't need to program to use netidx, it comes with a set of useful command line tools!

Command Line Publisher

The command line publisher allows you to publish values to netidx from stdin. The format of a published value is pipe separated, and newline delimited. e.g.

/foo/bar|u32|42

The three fields are,

- The path

- The type

- The value

or the special form

- The path

null

or the special form

- DROP

- the path

e.g. DROP|/foo/bar stops publishing /foo/bar

or the special form

- WRITE

- the path

e.g. WRITE|/foo/bar

enables writing to /foo/bar, and publishes it as null if it was

not already published. Written values will be sent to stdout in the

same format as is written by subscriber.

If you want to publish to a path that has a | character in it then

you must escape the | with \, e.g. \|. If you want to publish a

path that has a \ in it, then you must also escape it,

e.g. \\. e.g.

/this/path/has\|pipes\|and\\in-it|string|pipe and backslash everywhere

Arguments

There are several command line options to the netidx publisher command,

-b, --bind: optional, specify the network address to bind to. This can be specified in three forms.- an expression consisting of an ip/netmask that must match a unique

network interface on the machine running the publisher. This is

prefered, e.g.

- local, selects 127.0.0.1/24

- 10.0.0.0/8 selects the interface bound to a 10.x.x.x address

- 192.168.0.0/16 selects the interface bound to a 192.168.x.x address

- The publisher will choose a free port automatically starting at 5000

- if you must specify an exact address and port e.g.

- 127.0.0.1:5000

- 127.0.0.1:0, in which case the OS will choose the port at random, depending on the OS/libc this may pick an ephemeral port, so be careful.

- a public ip followed by the first or second forms for the internal bind ip.

Use this if you are running publishers behind a NAT (e.g. aws elastic ips)

- 54.32.223.1@172.31.0.0/16 will bind to any interface matching 172.31.0.0, but will advertise it's address to the resolver as 54.32.223.1.

- 54.32.224.1@0.0.0.0/32 will bind to every interface on the local machine but will advertise it's address to the resolver as 54.32.223.1.

- 54.32.224.1:5001@172.31.23.234:5001 will bind to 172.31.23.234 on port 5001 but will advertise it's address to the resolver as 54.32.224.1:5001. This would correspond to a typical single port forward NAT situation.

- an expression consisting of an ip/netmask that must match a unique

network interface on the machine running the publisher. This is

prefered, e.g.

-a, --auth: optional, specifies the authentication mechanism, anonymous, local, or krb5.--spn: optional, required if -a krb5, the service principal name the publisher should run as. This principal must have permission to publish where you plan to publish, must exist in your krb5 infrastructure, and you must have access to a keytab with it's credentials. If that keytab is in a non standard location then you must set the environment variableKRB5_KTNAME=FILE:/the/path/to/the/keytab--upn: optional, if you want to authenticate the publisher to the resolver server as a prinicpal other than the logged in user then you can specify that principal here. You must have a TGT for the specified principal.--identity: optional, the tls identity to use for publishing.--timeout <seconds>: optional, if specified requires subscribers to consume published values within the specified number of seconds or be disconnected. By default the publisher will wait forever for a subscriber to consume an update, and as a result could consume an unbounded amount of memory.

Behavior

When started the publisher runs until killed, it reads lines from

stdin as long as stdin remains open, and attempts to parse them as

PATH|TYPE|VALUE triples. If parsing fails, it prints an error to

stderr and continues reading. If parsing succeeds it checks if it has

already published PATH, if not, it publishes it with the specified

type and value, if it has, then it updates the existing published

value. It is not an error to change the type of an existing published

value. If stdin is closed publisher does not stop, however it is no

longer possible to update existing published values, or publish new

values without restarting it.

Limitations

The command line publisher cannot be a default publisher.

Environment Variables

In addition to all the krb5 environment variables, the command line

publisher uses envlogger, and so will respond to RUST_LOG,

e.g. RUST_LOG=debug will cause the publisher to print debug and

higher priority messages to stderr.

Types

The following types are supported,

u32: unsigned 32 bit integer, 4 bytes on the wirev32: unsigned 32 bit integer LEB128 encoded, 1-5 bytes on the wire depending on how big the number is. e.g. 0-128 is just 1 bytei32: signed 32 bit integer, 4 bytes on the wirez32: signed 32 bit integer LEB128 encoded 1-5 bytes on the wireu64: unsigned 64 bit integer, 8 bytes on the wirev64: unsigned 64 bit integer LEB128 encoded, 1-10 bytes on the wirei64: signed 64 bit integer, 8 bytes on the wirez64: signed 64 bit integer LEB128 encoded, 1-10 bytes on the wiref32: 32 bit single precision floating point number, 4 bytes on the wiref64: 64 bit double precision floating point number, 8 bytes on the wiredatetime: a date + time encoded as an i64 timestamp representing the number of seconds since jan 1 1970 UTC and a u32 number of sub second nanoseconds fixing the exact point in time. 12 bytes on the wireduration: a duration encoded as a u64 number of seconds plus a u32 number of sub second nanoseconds fixing the exact duration. 12 bytes on the wirebool: true, or false. 1 byte on the wirestring: a unicode string, limited to 1 GB in length. Consuming 1-10 + number of bytes in the string on the wire (the length is LEB128 encoded)bytes: a byte array, limited to 1 GB in length, Consuming 1-10 + number of bytes in the array on the wirearray: an array of netidx values, consuming 1+zlen(array)+sum(len(elts))result: OK, or Error + string, consuming 1-1+string length bytes

Command Line Subscriber

The command line subscriber allows you to subscribe to values in

netidx. You can either specify a list of paths you want to subscribe

to on the command line, or via commands sent to stdin. Once subscribed

a line in the form PATH|TYPE|VALUE will be printed for every update

to a subscribed value, including the initial value. e.g. on my local

network I can get the battery voltage of my solar array by typing,

netidx subscriber /solar/stats/battery_sense_voltage

/solar/stats/battery_sense_voltage|f32|26.796875

Directives via stdin

The command line subscriber reads commands from stdin which can direct it to,

- subscribe to a new path

ADD|/path/to/thing/you/want/to/add

- end a subscription

DROP|/path/to/thing/you/want/to/drop

- write a value to a subscribed path

WRITE|/path/to/thing/you/want/to/write|TYPE|VALUE- if the path you are writing to has a

|in it, then you must escape it, e.g.\|. If it has a literal\in it, then you also must escape it e.g.\\.

- call a netidx rpc

CALL|/path/to/the/rpc|arg=typ:val,...,arg=typ:val- commas in the val may be escaped with

\ - args may be specified multiple times

If the subscriber doesn't recognize a command it will print an error to stderr and continue reading commands. If stdin is closed subscriber will not quit, but it will no longer be possible to issue commands.

Arguments

-

-o, --oneshot: Causes subscriber to subscribe to each requested path, get one value, and then unsubscribe. In oneshot mode, if all requested subscriptions have been processed, and either stdin is closed, or-n, --no-stdinwas also specified, then subscriber will exit. e.g.netidx subscriber -no /solar/stats/battery_sense_voltageWill subscribe to

/solar/stats/battery_sense_voltage, print out the current value, and then exit. -

-n, --no-stdin: Do not read commands from stdin, only subscribe to paths passed on the command line. In this mode it is not possible to unsubscribe, write, or add new subscriptions after the program starts. -

-r, --raw: Do not print the path and type, just the value.-noris useful for printing the value of one or more paths to stdout and then exiting. -

-t, --subscribe-timeout: Instead of retrying failed subscriptions forever, only retry them for the specified number of seconds, after that remove them, and possibly exit if-o, --oneshotwas also specified.

Notes

The format subscriber writes to stdout is compatible with the format the publisher reads (unless -r is specified). This is by design, to make applications that subscribe, manipulate, and republish data easy to write.

Resolver Command Line Tool

The resolver command line tool allows you to query and update the resolver server from the command line. There are several kinds of querys/manipulations it can perform,

list: list entries matching a specified patterntable: query the table descriptor for a pathresolve: see what a path resolves toadd: add a new entryremove: remove an entry

List

netidx resolver list /solar/stats/*

This sub command allows listing items in the resolver server that

match a specific pattern. It supports the full unix glob pattern set,

including ** meaning any number of intermediate parents, and

{thing1, thing2, thing3, ..} for specifying specific sets. e.g. on

my machine I can get all the names under the /solar namespace that

begin with battery with the following query,

$ netidx resolver list /solar/**/battery*

/solar/settings/battery_charge_current_limit

/solar/stats/battery_v_max_daily

/solar/stats/battery_current_net

/solar/stats/battery_voltage_slow

/solar/stats/battery_voltage_settings_multiplier

/solar/stats/battery_sense_voltage

/solar/stats/battery_v_min_daily

/solar/stats/battery_temperature

/solar/stats/battery_terminal_voltage

Args

List supports several arguments,

-n, --no-structure: don't list matching items that are structural only. Only list items that are actually published.-w, --watch: don't quit, instead wait for new items matching the pattern and print them out as they appear.

Table

This prints out the table desciptor for a path, which can tell you how a given path will look in the browser by default. An example 10 row 5 column table generated by the stress publisher looks like this,

$ netidx resolver table /bench

columns:

2: 10

0: 10

1: 10

4: 10

3: 10

rows:

/bench/3

/bench/5

/bench/6

/bench/9

/bench/8

/bench/7

/bench/2

/bench/4

/bench/1

/bench/0

in the columns section, the number after the column name is the number of rows in the table that have that column. Since this table is fully populated every column is associated with 10 rows.

Resolve

Given a path(s) this prints out all the information the resolver server has about that path. This is what the subscriber uses to connect to a publisher, and as such this tool is useful for debugging subscription failures. Using the same stress publisher we saw above we can query one cell in the table.

[eric@blackbird ~]$ netidx resolver resolve /local/bench/0/0

publisher: Publisher { resolver: 127.0.0.1:4564, id: PublisherId(3), addr: 127.0.0.1:5011, hash_method: Sha3_512, target_auth: Local }

PublisherId(3)

First all the publisher records are printed. This is the full information about all the publishers that publish the requested paths. Then, a list of publisher ids is printed, this is the publisher id that corresponds to each path in the order the path was specified. If we asked for two paths under the /local/bench namespace then we will see how this works.

[eric@blackbird ~]$ netidx resolver resolve /local/bench/0/0 /local/bench/0/1

publisher: Publisher { resolver: 127.0.0.1:4564, id: PublisherId(3), addr: 127.0.0.1:5011, hash_method: Sha3_512, target_auth: Local }

PublisherId(3)

PublisherId(3)

Here we can clearly see that the same publisher (publisher id 3) is the one to contact about both requested paths. This output closely mirrors how the actual information is sent on the wire (except on the wire it's binary, and the ids are varints). If multiple publishers published any of the requested paths, their ids would appear on the same line separated by commas.

In the case that nothing is publishing the requested path then the tool will print nothing and exit.

Add

This is a low level debugging tool, and it's really not recommended unless you know exactly what you're doing. Using it could screw up subscriptions to whatever path you add for some time. That said, it's pretty simple,

netidx resolver add /path/to/thing 192.168.0.5:5003

This entry will time out after a while because no publisher is there to send heartbeats for it.

Note this will not work if your system is kerberos enabled, because the resolver server checks that the publisher is actually listening on the address it claims to be listening on, and that obviously can't work in this case.

Remove

This is actually worse than add in terms of danger, because you can remove published things without the publisher knowing you did it, and as a result you can make subscriptions fail until the publisher is restarted. It also doesn't work if you are using kerberos, so that's something.

netidx resolver remove /path/to/thing 192.168.0.5:5003`

Recorder

The recorder allows you to subscribe to a set of paths defined by one or more globs and write down their values in a file with a compact binary format. Moreover, at the same time it can make the contents of an archive available for playback by multiple simultaneous client sessions, each with a potentially different start time, playback speed, end time, and position.

It's possible to set up a recorder to both record data and play it back at the same time, or only record, or only play back. It is not possible to set up one recorder to record, and another to play back the same file, however recording and playback are careful not to interfere with each other, so the only limitation should be the underlying IO device and the number of processor cores available.

Args

--example: optional, print an example configuration file--config: required, path to the recorder config file

Configuration

e.g.

{

"archive_directory": "/foo/bar",

"archive_cmds": {

"list": [

"cmd_to_list_dates_in_archive",

[]

],

"get": [

"cmd_to_fetch_file_from_archive",

[]

],

"put": [

"cmd_to_put_file_into_archive",

[]

]

},

"netidx_config": null,

"desired_auth": null,

"record": {

"spec": [

"/tmp/**"

],

"poll_interval": {

"secs": 5,

"nanos": 0

},

"image_frequency": 67108864,

"flush_frequency": 65534,

"flush_interval": {

"secs": 30,

"nanos": 0

},

"rotate_interval": {

"secs": 86400,

"nanos": 0

}

},

"publish": {

"base": "/archive",

"bind": null,

"max_sessions": 512,

"max_sessions_per_client": 64,

"shards": 0

}

}

archive_directory: The directory where archive files will be written. The archive currently being written iscurrentand previous rotated files are named the rfc3339 timestamp when they ended.archive_commands: These are shell hooks that are run when various events happenlist: Shell hook to list available historical archive files. This will be combined with the set of timestamped files inarchive_directoryto form the full set of available archive files.get: Shell hook that is run before an archive file needs to be accessed. It will be accessed just after this command returns. This can, for example, move the file into place after fetching it from long term storage. It is passed the name of the file the archiver would like, which will be in the union of the local files and the set returned by list.pub: Shell hook that is run just after the current file is rotated. Could, for example, back the newly rotated file up, or move it to long term storage.

netidx_config: Optional path to the netidx config. Omit to use the default.desired_auth: Optional desired authentication mechanism. Omit to use the default.record: Section of the config used to record, omit to only play back.spec: a list of globs describing what to record. If multiple globs are specified and they overlap, the overlapped items will only be archived once.poll_interval: How often, in seconds, to poll the resolver server for changes to the specified glob set. 0 never poll, if omitted, the default is 5 seconds.image_frequency: How often, in bytes, to write a full image of every current value, even if it did not update. Writing images increases the file size, but makes seeking to an arbitrary position in the archive much faster. 0 to disable images, in which case a seek back will read all the data before the requested position, default 64MiB.flush_frequency: How much data to write before flushing to disk, in pages, where a page is a filesystem page. default 65534. This is the maximum amount of data you will probably lose in a power outage, system crash, or program crash. The recorder uses two phase commits to the archive file to ensure that partially written data does not corrupt the file.flush_interval: How long in seconds to wait before flushing data to disk even ifflush_frequencypages was not yet written. 0 to disable, default if omitted 30 seconds.rotate_interval: How long in seconds to wait before rotating the current archive file. Default if omitted, never rotate.

publish: Section of the config file to enable publishingbase: The base path to publish atbind: The bind config to use. Omit to use the default.max_sessions: The maximum total number of replay sessions concurrently in progress.max_sessions_per_client: The maximum number of replay sessions in progress for any single client.shards: The number of recorder shards to expect. If you want to record/playback a huge namespace, or one that updates a lot, it may not be possible to use just one computer. The recorder supports sharding across an arbitrary number of processes for both recording and playback. n is the number of shards that are expected in a given cluster. playback will not be avaliable until all the shards have appeared and synced with each other, however recording will begin immediatly. default if omitted 0 (meaning just one recorder).

Using Playback Sessions

When initially started for playback or mixed operation the recorder

publishes only some cluster information, and a netidx rpc called

session under the publish-base. Calling the session rpc will

create a new session, and return the session id. Then it will publish

the actual playback session under publish-base/session-id. A

playback session consists of two sub directories, control contains

readable/writable values that control the session, and data contains

the actual data.

Creating a New Session

It's simple to call a netidx rpc with command line tools, the browser,

or programatically. To create a new playback session with default

values just write null to publish-base/session. e.g.

netidx subscriber <<EOF

WRITE|/solar/archive/session|string|null

EOF

/solar/archive/session|string|ef93a9dce21f40c49f5888e64964f93f

We just created a new playback session called ef93a9dce21f40c49f5888e64964f93f, we can see that the recorder published some new things there,

$ netidx resolver list /solar/archive/ef93a9dce21f40c49f5888e64964f93f/*

/solar/archive/ef93a9dce21f40c49f5888e64964f93f/data

/solar/archive/ef93a9dce21f40c49f5888e64964f93f/cluster

/solar/archive/ef93a9dce21f40c49f5888e64964f93f/control

If we want to pass some arguments to the rpc so our session will be setup how we like by default we can do that as well, e.g.

netidx subscriber <<EOF

CALL|/solar/archive/session|start="-3d",speed=2

EOF

CALLED|/archive/session|"ef93a9dce21f40c49f5888e64964f93f"

Now our new session would be setup to start 3 days ago, and playback at 2x speed.

Playback Controls

Once we've created a new session the recorder publishes some controls under the control directory. The five controls both tell you the state of the playback session, and allow you to control it. They are,

start: The timestamp you want playback to start at, or Unbounded for the beginning of the archive. This will always display Unbounded, or a timestamp, but it in addition to those two values it accepts writes in the form of offsets from the current time, e.g. -3d would set the start to 3 days ago. It accepts offsets [+-]N[yMdhms] where N is a number. y - years, M - months, d - days, h - hours, m - minutes, s - seconds.end: Just like start except that Unbounded, or a time in the future means that when playback reaches the end of the archive it will switch mode to tail. In tail mode it will just repeat data as it comes in. In the case that end is in the future, but not unbounded, it will stop when the future time is reached.pos: The current position, always displayed as a timestamp unless there is no data in the archive. Pos accepts writes in the form of timestamps, offsets from the current time (like start and end), and [+-]1-128 batches. e.g. -10 would seek back exactly 10 update batches, +100 would seek forward exactly 100 update batches.speed: The playback speed as a fraction of real time, or Unlimited. In the case of Unlimited the archive is played as fast as it can be read, encoded, and sent. Otherwise the recorder tries to play back the archive at aproximately the specified fraction of real time. This will not be perfect, because timing things on computers is hard, but it tries to come close.state: this is either play, pause or tail, and it accepts writes of any state and will change to the requested state if possible.

Since the controls also include a small amount of documentation meant

to render as a table, the actual value that you read from/write to is

publish-base/session-id/control/name-of-control/current.

Data

Once the session is set up the data, whatever it may be, appears under

publish-base/data. Every path that ever appears in the archive is

published from the beginning, however, if at the current pos that

path didn't have a value, then it will be set to null. This is a

slightly unfortunate compromise, as it's not possible to tell the

difference between a path that wasn't available, and one that was

intentionally set to null. When you start the playback values will be

updated as they were recorded, including replicating the observed

batching.